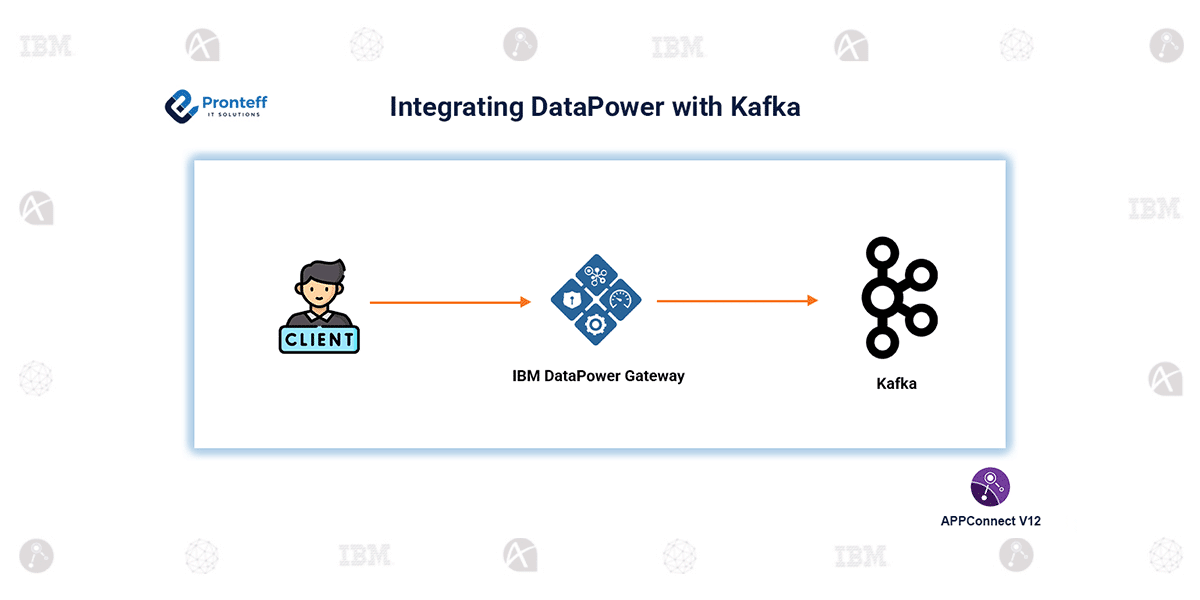

Integrating DataPower with Kafka

In this blog, we will learn how to integrate IBM DataPower with Apache Kafka so that messages received by DataPower are published to Kafka topics.

Seamless Data Exchange with DataPower and Kafka

Imagine a system where messages flow securely, in near real time, from one application to many others. That is what happens when IBM DataPower meets Kafka. DataPower acts as the gatekeeper, validating and transforming messages before publishing them to Kafka topics. The Kafka cluster then stores each message durably, keeping replicas in sync across brokers, so that every subscribed consumer can read it reliably. This setup enables real-time, fault-tolerant communication across multiple backend systems, making data exchange faster, smarter, and more secure.

Why Integrate DataPower with Kafka?

IBM DataPower is a security and integration gateway that excels at validating, transforming, and routing messages. Kafka, on the other hand, is a distributed messaging platform designed for high-throughput, fault-tolerant, and real-time data streaming. By integrating DataPower with Kafka, organisations can achieve:

- Secure message handling with validation and transformation.

- Real-time delivery of data to multiple systems.

- Scalable architecture that supports growing data volumes.

- Fault-tolerant communication with Kafka’s replication and cluster features.

Step-by-Step Workflow

- Receive and Validate: DataPower accepts the message and checks its schema and structure.

- Transform: The message is converted to the required format if needed.

- Publish to Kafka: Using the Kafka cluster object, DataPower publishes the message to the configured topic.

- Kafka Distribution: Kafka brokers store the message in the topic and replicate it to follower replicas; subscribed consumers then read it from the topic.

- Processing by Consumers: Backend systems process the messages in real time for further operations.
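The workflow above can be modelled in a few lines. The sketch below is a hypothetical, in-memory stand-in written in Python: it does not use DataPower or a real Kafka client, and the required fields `orderId` and `amount` are assumptions for illustration. It shows validation, transformation, publishing to an append-only topic, and two consumer groups that each read the same record at their own offset. Replication and partitions are left out for brevity.

```python
import json
from collections import defaultdict

# Hypothetical schema for illustration only: every message must carry these fields.
REQUIRED_FIELDS = {"orderId", "amount"}


def validate(raw: str) -> dict:
    """Receive and Validate: parse JSON and check that required fields are present."""
    message = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(message, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS - set(message)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return message


def transform(message: dict) -> bytes:
    """Transform: convert to the format the backend expects (here, compact JSON bytes)."""
    return json.dumps(message, separators=(",", ":"), sort_keys=True).encode("utf-8")


class InMemoryTopic:
    """Publish and Distribute: an append-only log where each consumer group keeps its own offset."""

    def __init__(self) -> None:
        self.log: list = []
        self.offsets = defaultdict(int)  # consumer group name -> next offset to read

    def publish(self, record: bytes) -> int:
        self.log.append(record)
        return len(self.log) - 1  # offset of the newly appended record

    def poll(self, group: str) -> list:
        start = self.offsets[group]
        records = self.log[start:]
        self.offsets[group] = len(self.log)  # commit: the group has consumed everything so far
        return records


topic = InMemoryTopic()
topic.publish(transform(validate('{"orderId": "A1", "amount": 10}')))

# Two independent consumer groups (e.g. billing and shipping) each receive the same record.
billing = topic.poll("billing")
shipping = topic.poll("shipping")
```

Because each group tracks its own offset, adding another backend system means adding another group, without changing the publisher. This is the property that lets one DataPower publish fan out to many consumers.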

STEPS FOR INTEGRATING

1. Start ZooKeeper

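ZooKeeper manages and coordinates the Kafka cluster. Start it first, because in a ZooKeeper-based deployment Kafka relies on ZooKeeper to maintain broker metadata. (Newer Kafka releases can instead run in KRaft mode without ZooKeeper, and Kafka 4.0 removed ZooKeeper support entirely; this walkthrough uses a ZooKeeper-based setup.)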

Ensure the service is running correctly before starting Kafka.
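In the Kafka quickstart for ZooKeeper-based releases, ZooKeeper is started with `bin/zookeeper-server-start.sh config/zookeeper.properties`. One quick way to confirm it is up before starting Kafka is to check that its client port accepts TCP connections. The Python sketch below does only that. It assumes the default client port 2181 from the sample config and does not verify full ZooKeeper health.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures (all OSError subclasses).
        return False


if __name__ == "__main__":
    # 2181 is the clientPort in the sample config/zookeeper.properties shipped with
    # ZooKeeper-based Kafka releases; change it if your setup differs.
    print("ZooKeeper port open:", is_port_open("localhost", 2181))
```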

2. Start the Kafka Server

Start the Kafka broker to enable topic creation and message publishing.

Verify the broker is listening on the correct advertised host and port.

Confirm that Kafka can communicate with ZooKeeper successfully.
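The broker is typically started with `bin/kafka-server-start.sh config/server.properties`. The advertised host and port matter because a client (here, DataPower) uses the bootstrap address only for its first connection and then connects to the addresses the broker advertises. If the broker advertises `localhost` while DataPower runs on another machine, publishing will fail. The hypothetical helper below reads `advertised.listeners` from `server.properties` text, falling back to `listeners` as Kafka does when the former is unset, so you can check what the broker will advertise. It is a simplified parser that only handles `key=value` lines.

```python
from __future__ import annotations


def read_property(properties_text: str, key: str) -> str | None:
    """Return the value for `key` from simple key=value properties text, or None."""
    for raw in properties_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments ('#' and '!' start comments in .properties files).
        if not line or line.startswith(("#", "!")):
            continue
        name, sep, value = line.partition("=")
        if sep and name.strip() == key:
            return value.strip()
    return None


def broker_endpoints(properties_text: str) -> list[tuple[str, str, int]]:
    """Return (listener_name, host, port) for each listener the broker will advertise."""
    value = read_property(properties_text, "advertised.listeners")
    if value is None:
        # Kafka uses `listeners` when `advertised.listeners` is not set.
        value = read_property(properties_text, "listeners")
    if not value:
        return []
    endpoints = []
    for entry in value.split(","):
        # Each entry looks like NAME://host:port; an empty host means the default interface.
        name, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        endpoints.append((name, host, int(port)))
    return endpoints
```

For example, passing the contents of your `server.properties` to `broker_endpoints` and seeing `("PLAINTEXT", "localhost", 9092)` is a sign that a remote DataPower appliance will not be able to reach the broker.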

3. Create an MPGW in DataPower

Create a Multi-Protocol Gateway (MPGW) to receive incoming client requests securely.

This acts as the entry point for messages before sending them to Kafka.

4. Create a Kafka cluster object in DataPower

Define the Kafka cluster connection details (bootstrap servers, security, timeouts).

This object lets DataPower publish messages directly to Kafka topics.

Link this cluster object inside your MPGW processing policy.

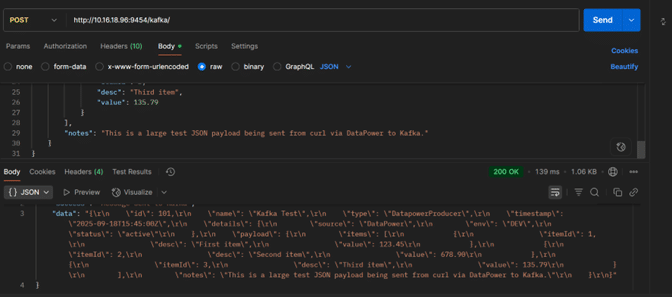

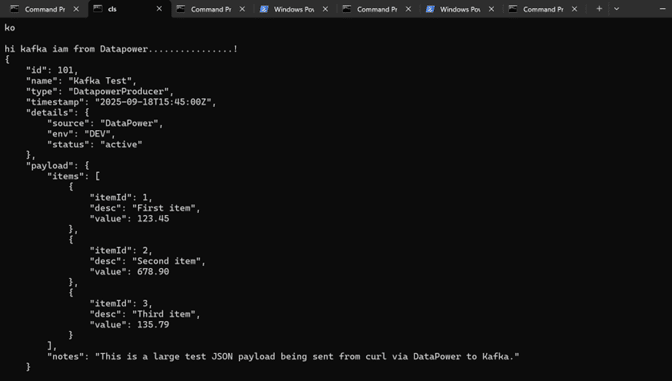
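The cluster object holds roughly the same connection details that a standalone Kafka client needs. As a hedged illustration, and not DataPower's own configuration format, the sketch below renders those details as standard Kafka client properties (`bootstrap.servers`, `security.protocol`, `request.timeout.ms`). This lets you sanity-check the same broker settings with a plain Kafka client before wiring them into the MPGW. The helper name `render_client_config` and its defaults are hypothetical.

```python
from __future__ import annotations

# Values accepted by the Kafka client setting security.protocol.
SECURITY_PROTOCOLS = {"PLAINTEXT", "SSL", "SASL_PLAINTEXT", "SASL_SSL"}


def render_client_config(
    bootstrap_servers: list[str],
    security_protocol: str = "PLAINTEXT",
    request_timeout_ms: int = 30000,
) -> str:
    """Render connection details as Kafka client properties (one key=value per line)."""
    if not bootstrap_servers:
        raise ValueError("at least one bootstrap server is required")
    for server in bootstrap_servers:
        # bootstrap.servers expects host:port entries separated by commas.
        host, sep, port = server.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {server!r}")
    if security_protocol not in SECURITY_PROTOCOLS:
        raise ValueError(f"unknown security.protocol {security_protocol!r}")
    lines = [
        "bootstrap.servers=" + ",".join(bootstrap_servers),
        f"security.protocol={security_protocol}",
        f"request.timeout.ms={request_timeout_ms}",
    ]
    return "\n".join(lines) + "\n"
```

Listing more than one bootstrap server only affects the first connection: if one broker is down, the client can still fetch cluster metadata from another.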

5. Testing: Initiating the Request

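Send a test request to the MPGW and confirm that it arrives on the Kafka topic. In our test, the Kafka server received the payload via DataPower successfully.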
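Any HTTP client can send the test request. The Python sketch below posts a JSON payload to the MPGW's front-side handler using only the standard library. The URL, port, path, and payload are hypothetical placeholders, and the response you get back depends on how your processing policy is configured. To confirm that the message reached Kafka, you can read the topic with the console consumer, for example `bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic <your-topic> --from-beginning`.

```python
from __future__ import annotations

import json
import urllib.request


def send_test_message(url: str, payload: dict, timeout: float = 10.0) -> tuple[int, bytes]:
    """POST `payload` as JSON to `url` and return (HTTP status, response body)."""
    body = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read()


# Example (hypothetical MPGW URL; replace host, port and path with your own):
# status, reply = send_test_message("http://datapower.example.com:8080/kafka", {"orderId": "A1"})
```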

CONCLUSION:

Integrating DataPower with Kafka creates a robust, secure, and scalable data pipeline. Messages flow seamlessly from clients to multiple backend systems in real time, improving operational efficiency and enabling fault-tolerant, high-throughput communication. This integration empowers organisations to build modern, event-driven architectures that can handle the demands of today’s digital landscape.