OpenShift Incorporates Ambient Service Mesh and Scalable GitOps

In this blog, we look at how OpenShift incorporates ambient mode for its service mesh and an agent-based approach to scaling GitOps.

Kubernetes is the backbone of cloud-native application deployment. However, managing an application’s full lifecycle (building, securing, deploying, monitoring, and operating it) demands more than container orchestration. Red Hat OpenShift addresses this with an application platform built to handle every phase of that lifecycle. It integrates more than a decade of open source innovation from the Cloud Native Computing Foundation (CNCF) into a unified experience, and it runs not only containerized workloads but also virtual machines across on-premises data centers, public clouds, bare metal environments, and edge locations.

Red Hat OpenShift streamlines and automates the management of Kubernetes and key CNCF technologies, helping organizations use resources more efficiently and scale their applications more effectively. The result is faster innovation, stronger security, and better operational performance. Whether you’re modernizing existing systems, building new cloud-native applications, or deploying workloads at the edge, OpenShift provides the tools and infrastructure to support those goals.

Building on this platform, Red Hat OpenShift introduces ambient mode as a technology preview in OpenShift Service Mesh 3. Ambient mode simplifies the service mesh data plane by removing the need for sidecar proxies. In addition, OpenShift GitOps now includes a new Argo CD agent that enables a multicluster GitOps control plane, providing a scalable, security-focused framework for managing applications and Kubernetes configurations across a fleet of clusters.

Streamlining OpenShift Service Mesh with Ambient Mode

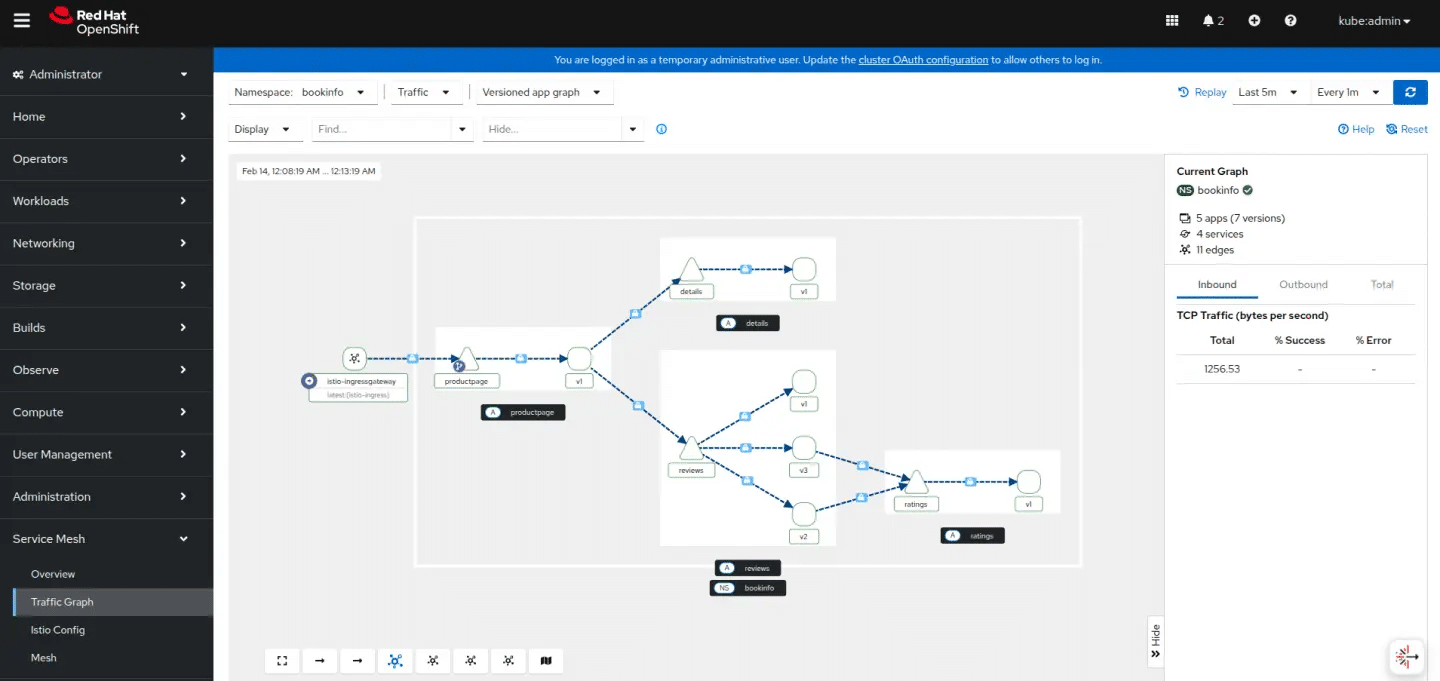

Service meshes play a vital role in cloud-native environments by securing and managing communication between microservices. The recent release of OpenShift Service Mesh 3.0 emphasizes closer alignment with the upstream Istio project and improved multicluster support. With Istio’s multicluster capabilities, organizations can extend a single service mesh across multiple OpenShift clusters for high availability and centralized management. This setup is especially useful when a single administrative team manages deployments spread across clusters, availability zones, or geographic regions.

Istio has traditionally relied on sidecars, programmable proxies injected automatically into each application pod, to provide security, traffic control, and observability without changes to application code. While this model is feature-rich, it adds resource overhead, because every application pod runs an extra proxy container. Where performance and efficiency are critical, that overhead may be unacceptable, and some organizations forgo the mesh entirely in favor of conventional networking.

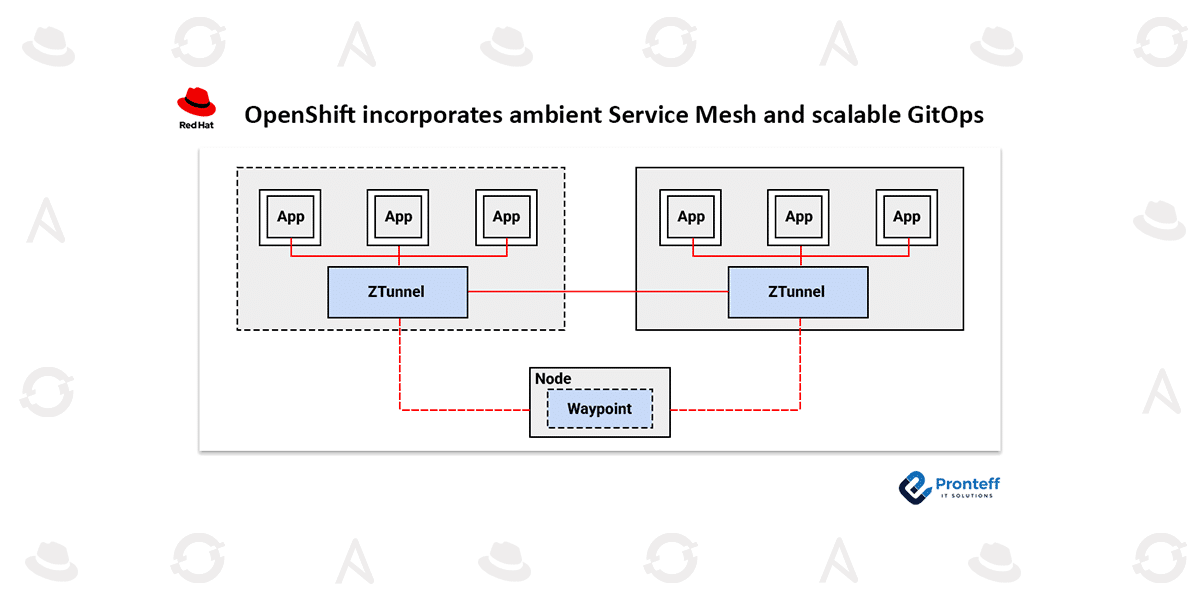

To address these challenges, an upcoming release of OpenShift Service Mesh introduces a redesigned architecture based on Istio’s ambient mode, delivered as a technology preview. Ambient mode removes the need for sidecar proxies by moving networking responsibilities out of application pods. Instead, it relies on node-level proxies and a secure overlay network to provide features such as mutual TLS (mTLS) encryption. Pod-to-pod traffic is handled by ztunnel, a per-node zero trust proxy operating at layer 4 (L4), which uses cryptographic workload identities to secure traffic and provide lightweight authentication and telemetry.
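As a rough illustration, upstream Istio enrolls workloads in ambient mode with a namespace label rather than a sidecar-injection label. The Python sketch below builds such a Namespace manifest. It assumes the OpenShift Service Mesh technology preview behaves like upstream Istio and that the ambient components (including ztunnel) are already installed; the `bookinfo` namespace name is hypothetical.

```python
import json

# Upstream Istio label that enrolls a namespace in ambient mode (assumed to
# carry over unchanged to the OpenShift Service Mesh technology preview).
AMBIENT_LABEL_KEY = "istio.io/dataplane-mode"
AMBIENT_LABEL_VALUE = "ambient"


def ambient_namespace(name: str) -> dict:
    """Return a Namespace manifest whose pods are handled by the node's ztunnel.

    No sidecar is injected, so existing pods join the mesh without a restart.
    """
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {AMBIENT_LABEL_KEY: AMBIENT_LABEL_VALUE},
        },
    }


if __name__ == "__main__":
    # Roughly equivalent to:
    #   oc label namespace bookinfo istio.io/dataplane-mode=ambient
    print(json.dumps(ambient_namespace("bookinfo"), indent=2))
```

Because traffic is redirected at the node level, enrollment is a property of the namespace, not of each pod spec.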

For more advanced capabilities, ambient mode adds waypoint proxies: layer 7 (L7) components deployed as standard pods in the application’s namespace. Waypoints provide advanced routing, authorization policies, and detailed observability. Because they are optional, deployed only where L7 features are needed, and scale horizontally with traffic demand, waypoints keep the mesh efficient while minimizing resource usage.
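In upstream Istio, a waypoint is declared as a Kubernetes Gateway API `Gateway` with the `istio-waypoint` gateway class, and Istio deploys the proxy pods for it. The sketch below generates that resource plus the namespace labels that send the namespace’s service traffic through it. It assumes upstream Istio behavior and that the Gateway API CRDs are installed; the helper function names and the `bookinfo` namespace are hypothetical.

```python
import json


def waypoint_gateway(namespace: str, name: str = "waypoint") -> dict:
    """Gateway API resource that Istio turns into a waypoint proxy Deployment."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {
            "name": name,
            "namespace": namespace,
            # Handle traffic addressed to Services (the default waypoint type).
            "labels": {"istio.io/waypoint-for": "service"},
        },
        "spec": {
            "gatewayClassName": "istio-waypoint",
            # ztunnel forwards traffic to the waypoint over HBONE on port 15008.
            "listeners": [{"name": "mesh", "port": 15008, "protocol": "HBONE"}],
        },
    }


def use_waypoint_labels(name: str = "waypoint") -> dict:
    """Namespace labels: enroll in ambient mode and route via the named waypoint."""
    return {"istio.io/dataplane-mode": "ambient", "istio.io/use-waypoint": name}


if __name__ == "__main__":
    print(json.dumps(waypoint_gateway("bookinfo"), indent=2))
    print(json.dumps(use_waypoint_labels(), indent=2))
```

Namespaces without the `istio.io/use-waypoint` label keep L4-only handling by ztunnel, which is what lets L7 cost be paid only where it is needed.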

This architecture offers clear advantages for developers and operations teams alike. Developers no longer have to account for sidecar containers in their workloads (for example, by restarting pods to inject them), so they can focus on application logic. Platform and operations teams benefit from simpler upgrades, lower resource usage, and less complexity in managing the service mesh. With fewer proxies running across the cluster, the infrastructure becomes more efficient, enabling higher application density and better use of compute resources.
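The claim about running fewer proxies can be made concrete with simple arithmetic. Sidecar mode runs one proxy per meshed pod, while ambient mode runs one ztunnel per node plus whatever waypoint replicas are deployed. The cluster sizes below are hypothetical, and the sketch counts proxies only; actual CPU and memory use depends on traffic and configuration.

```python
def sidecar_proxies(pods: int) -> int:
    # Sidecar mode: one Envoy proxy injected into every meshed application pod.
    return pods


def ambient_proxies(nodes: int, waypoint_replicas: int = 0) -> int:
    # Ambient mode: one ztunnel per node (a DaemonSet) plus any waypoint
    # replicas deployed for namespaces that need L7 features.
    return nodes + waypoint_replicas


if __name__ == "__main__":
    # Hypothetical cluster: 10 worker nodes running 400 meshed pods, with
    # two namespaces that each run a 2-replica waypoint.
    print("sidecar:", sidecar_proxies(400))        # sidecar: 400
    print("ambient:", ambient_proxies(10, 2 * 2))  # ambient: 14
```

The sidecar count grows with the number of pods, while the ambient count grows with the number of nodes and the number of namespaces that opt into L7 processing.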

Enhancing GitOps at Scale with Argo CD Multicluster Control Plane

As organizations expand their use of Kubernetes, they often end up managing large numbers of clusters across on-premises data centers, public clouds, and edge locations. To handle this complexity, many have adopted GitOps, a methodology in which the desired state of each cluster is declared in a Git repository and automatically applied to the target environments. This approach brings consistency, traceability, and better governance. However, traditional GitOps setups, in which a central Argo CD instance connects directly to every managed cluster, can struggle to scale to hundreds or thousands of clusters, especially across diverse networks with restricted connectivity, which makes secure and efficient management difficult.

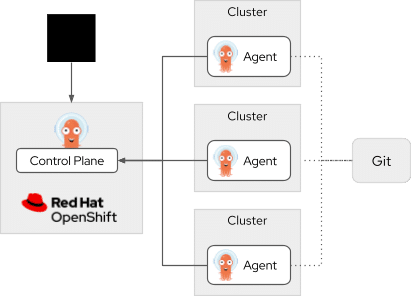

To overcome these challenges of scaling, securing, and operating GitOps across large cluster fleets, Red Hat has contributed a new agent-based architecture for Argo CD to the Argo community. In this design, a lightweight agent runs in each target cluster. The agent retrieves the desired configuration from a central Argo CD control plane and applies changes locally, so the central server no longer needs to connect to or directly manage every cluster. This model improves scalability, reduces complexity, and strengthens security across distributed environments.

Diagram of the Argo CD Agent-Based Architecture

In the diagram, a Git repository on the right serves as the source of truth for configuration, with dotted lines connecting it to three Kubernetes clusters in the center. Each of these clusters runs an Argo CD agent that pulls and applies configuration locally. Solid lines link the clusters back to a central control plane cluster on the left, which runs OpenShift and hosts the main Argo CD instance. A user icon with a solid arrow pointing to the control plane cluster shows that users interact with the system through the centralized management interface.

This distributed approach improves scalability and lowers resource usage. It also enhances security by keeping permissions local to each cluster’s agent, so the control plane does not need credentials for every managed cluster. Reliability improves as well, because agents can keep applying updates even when connectivity to the central control plane is intermittent. The Argo CD multicluster control plane and its agents are now available as a technology preview in Red Hat OpenShift GitOps and remain fully compatible with existing Argo CD installations.
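To illustrate why a pull-based agent tolerates intermittent connectivity, here is a minimal, hypothetical reconcile loop in Python. It is not the Argo CD agent’s code or API: `fetch` stands in for pulling desired state from the control plane, and resources are modeled as a simple name-to-version map. When the control plane is unreachable, the agent keeps enforcing the last desired state it received.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# Hypothetical model of cluster state: resource name -> version/digest.
DesiredState = Dict[str, str]


@dataclass
class Agent:
    """Hypothetical pull-based agent running inside one workload cluster."""
    fetch: Callable[[], DesiredState]  # pulls desired state from the control plane
    live: DesiredState = field(default_factory=dict)  # what is applied locally
    cached: Optional[DesiredState] = None  # last desired state successfully pulled

    def reconcile(self) -> bool:
        """Run one sync pass; return True if the control plane was reachable."""
        reachable = True
        try:
            self.cached = self.fetch()
        except ConnectionError:
            # Control plane unreachable: fall back to the last known desired state.
            reachable = False
        if self.cached is not None:
            self.apply(self.cached)
        return reachable

    def apply(self, desired: DesiredState) -> None:
        # Create or update resources whose live version differs from desired.
        for name, version in desired.items():
            if self.live.get(name) != version:
                self.live[name] = version
        # Prune resources that are no longer part of the desired state.
        for name in list(self.live):
            if name not in desired:
                del self.live[name]
```

Because the agent holds its own copy of the desired state, local drift can still be corrected while it is offline; only new changes from Git wait for connectivity to return.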

With the Argo CD agent, OpenShift delivers a GitOps solution tailored to distributed environments and the evolving needs of hybrid cloud infrastructure.

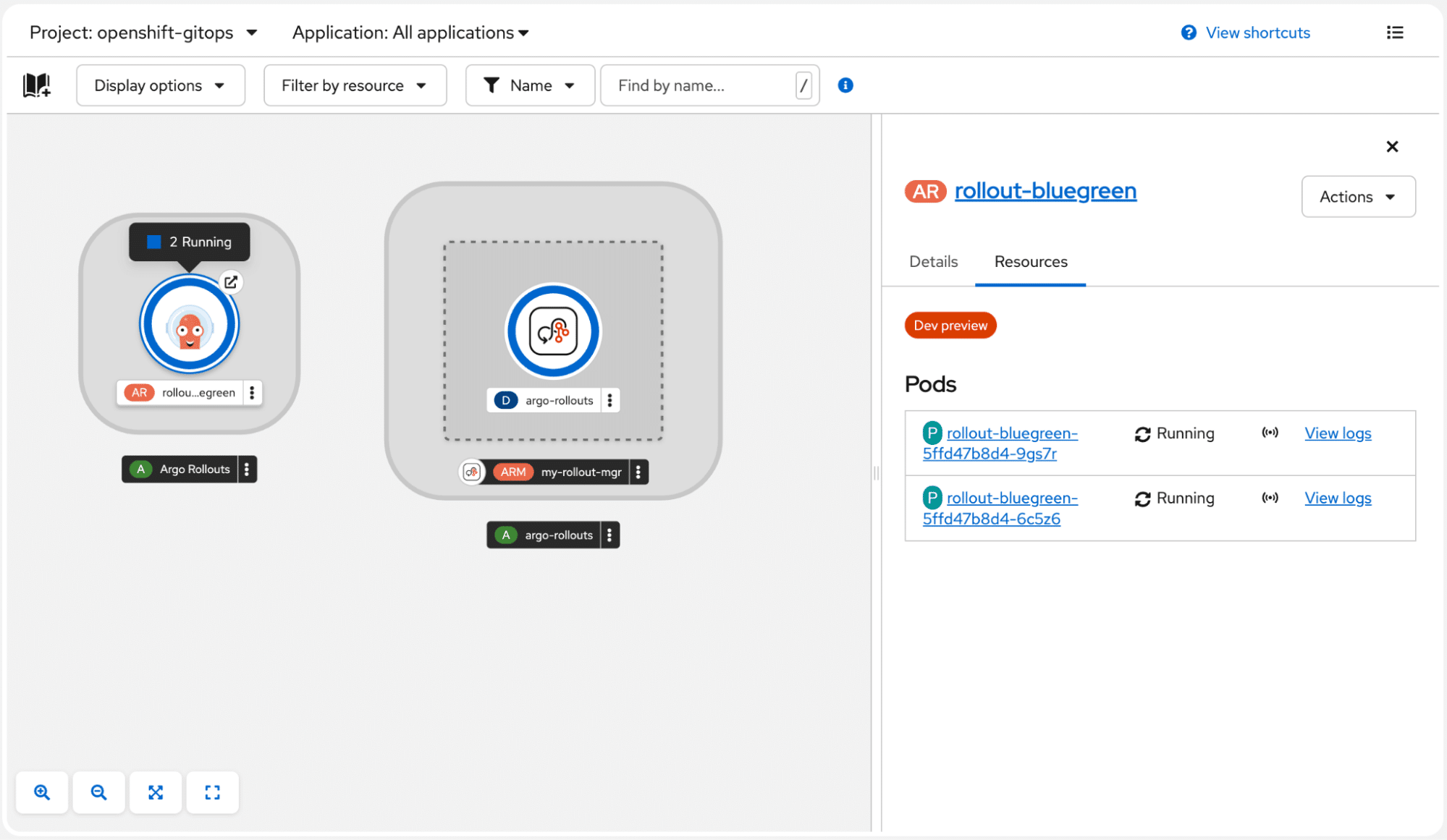

OpenShift GitOps includes Argo Rollouts to support progressive delivery and sophisticated deployment strategies like canary releases with automated monitoring and rollback capabilities. Visualization of rollouts is now built directly into the Argo CD dashboard and is also integrated into the topology view of the OpenShift web console.

This screenshot shows the updated Argo Rollouts page in the OpenShift console. On the left, a topology view illustrates an active blue/green rollout, while the panel on the right provides detailed information about the rollout and its resources, showing two pods currently in a running state.
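A canary strategy like the one described above is declared in an Argo Rollouts `Rollout` resource. The sketch below builds a minimal one as a Python dictionary; the name, image, replica count, and step values are hypothetical. Automated analysis and rollback would additionally require an `AnalysisTemplate` referenced from the strategy, which is omitted here.

```python
import json


def canary_rollout(name: str, image: str, replicas: int = 5) -> dict:
    """Argo Rollouts Rollout that shifts a new version in gradually."""
    labels = {"app": name}
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Rollout",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
            "strategy": {
                "canary": {
                    # Without a traffic router, weights are approximated by the
                    # ratio of canary pods to stable pods.
                    "steps": [
                        {"setWeight": 20},
                        {"pause": {"duration": "10m"}},  # observe before continuing
                        {"setWeight": 50},
                        {"pause": {}},  # wait indefinitely for manual promotion
                    ]
                }
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(canary_rollout("web", "quay.io/example/web:2.0"), indent=2))
```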

As GitOps workflows become more widespread, external secret managers have grown in importance as a way to keep sensitive information out of Git repositories. OpenShift already supports this through the Secrets Store CSI Driver, which integrates with popular secret management solutions. Now, we are launching External Secrets as a technology preview to broaden that compatibility and support GitOps workflows across a wider range of workloads and scenarios.
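With External Secrets, what goes into Git is an `ExternalSecret` resource that only references a secret held in an external manager; the operator then creates or updates a regular Kubernetes Secret from it. The sketch below assumes the upstream External Secrets Operator schema (the `external-secrets.io/v1beta1` API version, which may differ in the version shipped with OpenShift) and an existing `SecretStore`; the store, key, and property names are hypothetical.

```python
import json


def external_secret(name: str, store: str, remote_key: str, prop: str) -> dict:
    """ExternalSecret that is safe to commit: it holds a reference, not a value."""
    return {
        "apiVersion": "external-secrets.io/v1beta1",
        "kind": "ExternalSecret",
        "metadata": {"name": name},
        "spec": {
            "refreshInterval": "1h",  # how often the operator re-reads the source
            "secretStoreRef": {"name": store, "kind": "SecretStore"},
            "target": {"name": name},  # Kubernetes Secret the operator manages
            "data": [
                {"secretKey": prop, "remoteRef": {"key": remote_key, "property": prop}},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(external_secret("db-credentials", "vault-store", "apps/db", "password"), indent=2))
```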

Additionally, Red Hat OpenShift Pipelines, a Kubernetes-native, security-oriented CI/CD solution built on Tekton, now offers StepActions as a generally available feature. StepActions let teams share and distribute reusable CI/CD components across Tekton Tasks, promoting consistency and standardization within organizations. The latest update also includes an improved pruner that lets teams define flexible pipeline cleanup policies based on criteria such as time-to-live, execution count, successes, and failures, giving them precise control over the pruning process.
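To make the pruning criteria concrete, the following hypothetical Python function decides which runs would be deleted under a policy that combines a time-to-live with separate history limits for successful and failed runs. It illustrates the selection logic only; it does not reproduce the OpenShift Pipelines pruner’s configuration format or behavior.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Run:
    name: str
    finished: datetime
    succeeded: bool


def runs_to_prune(runs: List[Run], now: datetime,
                  ttl: Optional[timedelta] = None,
                  keep_successful: Optional[int] = None,
                  keep_failed: Optional[int] = None) -> List[str]:
    """Return the names of runs a TTL + history-limit policy would delete."""
    doomed = set()
    # Time-to-live: delete anything that finished more than `ttl` ago.
    if ttl is not None:
        doomed.update(r.name for r in runs if now - r.finished > ttl)
    # History limits: keep only the N most recent successful / failed runs.
    for succeeded, limit in ((True, keep_successful), (False, keep_failed)):
        if limit is None:
            continue
        group = sorted((r for r in runs if r.succeeded == succeeded),
                       key=lambda r: r.finished, reverse=True)
        doomed.update(r.name for r in group[limit:])
    return sorted(doomed)
```

A run is deleted if any configured rule selects it, so combining a short TTL with generous history limits still removes old runs.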