Ensuring AI safety while reducing enterprise cybersecurity risks

In this blog post, we look at how to keep AI systems both secure and safe while lowering enterprise cybersecurity risk.

Artificial intelligence is advancing at an incredible pace, and organizations are rapidly combining AI with their enterprise data to improve employee productivity, enhance customer experiences, and create new business value. But as AI becomes more deeply embedded in enterprise systems, the data and infrastructure supporting it also become more appealing targets for attackers.

Generative AI, in particular, expands the potential attack surface dramatically. This makes it essential for organizations to examine where vulnerabilities may exist and how those weaknesses could affect operations. Deploying AI responsibly requires more than patching gaps; it demands a deliberate, organization-wide security strategy. Security must be integrated from the start—not added later.

AI Security vs. AI Safety: What’s the Difference?

Although the terms are often used together, AI security and AI safety address different areas of risk. Both are important, but each solves a separate problem.

AI Security

AI security is about keeping AI systems protected: ensuring the confidentiality, integrity, and availability of their data, models, and infrastructure. Threats may include:

- Vulnerabilities in AI-related software

- Incorrect configurations or weak authentication that expose sensitive data

- Prompt injection or attempts to bypass protections

- Unauthorized access that compromises model integrity

In many ways, AI security mirrors traditional IT security—but applied to new types of workloads and components.

AI Safety

AI safety focuses on whether AI systems behave responsibly and in line with organizational policies, regulations, and ethical expectations. Safety risks involve the outputs generated by the model, including:

- Offensive or harmful language

- Biased recommendations

- Hallucinated or fabricated information

- Misleading or unsafe advice

While a security failure compromises the system, a safety failure damages trust, introduces reputational risk, and may create ethical or legal issues.

Examples of Security and Safety Risks

Security Risks

- Memory vulnerabilities: For example, a heap overflow in a supporting library that lets an attacker influence model outputs.

- Insecure setups: Exposed servers or tools lacking authentication.

- Broken authorization: Users gaining access with incorrect or overly broad tokens.

- Supply chain attacks: Injecting malicious code into an open-source model.

- Jailbreaking: Attempts to override model safeguards.
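The broken-authorization item above comes down to checking that a token carries the scopes an action needs, and ideally nothing more. The following is a minimal sketch, not a definitive implementation. The scope names (`model:infer`, `model:admin`) and the helpers `is_authorized` and `excess_scopes` are hypothetical, and a real deployment would read scopes from a cryptographically verified token rather than a plain set.

```python
# Hypothetical mapping of actions to required scopes; real systems define their own.
REQUIRED_SCOPES = {
    "run_inference": {"model:infer"},
    "update_weights": {"model:admin"},
}


def is_authorized(action: str, token_scopes: set[str]) -> bool:
    """Return True only if the token holds every scope the action requires."""
    required = REQUIRED_SCOPES.get(action)
    if required is None:
        # Unknown actions are denied by default.
        return False
    return required <= token_scopes


def excess_scopes(action: str, token_scopes: set[str]) -> set[str]:
    """Return scopes the token carries beyond what the action needs."""
    return token_scopes - REQUIRED_SCOPES.get(action, set())
```

A non-empty result from `excess_scopes` is one way to spot overly broad tokens during an access review.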

Safety Risks

- Bias: Outputs that discriminate based on personal characteristics.

- Hallucinations: Confident but incorrect information.

- Harmful responses: Suggestions that could physically or emotionally harm users.

- Toxic language: Answers containing offensive or abusive content.

The Emerging Risk Area: Understanding AI “Safety”

While strengthening security is essential, large language models introduce a new category of risk: safety. In AI, safety refers to ethical alignment, responsible outputs, fairness, and trustworthiness—not physical safety.

A security failure might expose customer data.

A safety failure might result in biased hiring suggestions or problematic chatbot responses.

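Why do these failures occur? Because LLMs are trained to predict likely text, not to validate truth or ensure responsible behavior. Their output is also typically sampled from a probability distribution rather than computed deterministically, so responses can vary even for identical prompts. This unpredictability complicates governance, because a single passing test does not show that a prompt is always handled safely.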
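To illustrate why identical prompts can yield different responses, here is a toy sketch of sampling a next token from a probability distribution. The three-token vocabulary and its scores are made up for illustration, and `sample_token` is a hypothetical helper. A real model works over a far larger vocabulary.

```python
import math
import random


def softmax(scores: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab: list[str], scores: list[float], temperature: float,
                 rng: random.Random) -> str:
    """Draw one token at random according to the softmax probabilities."""
    probs = softmax(scores, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Made-up vocabulary and scores, for illustration only.
vocab = ["approve", "reject", "escalate"]
scores = [2.0, 1.5, 0.5]

rng = random.Random()  # unseeded, so repeated runs can differ
draws = [sample_token(vocab, scores, temperature=1.0, rng=rng) for _ in range(10)]
print(draws)
```

Lowering the temperature concentrates probability on the top-scoring token, which makes output more repeatable but does not make the model's answers any more correct.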

Even well-trained models can generate harmful outputs. Fine-tuning can unintentionally remove safeguards or amplify risks.

Managing this requires modern tooling, such as:

- Safety-focused alignment during training

- Continuous testing and evaluation against safety benchmarks

- “Evaluation harnesses” that score the likelihood of risky outputs
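As a rough illustration of the evaluation-harness idea, the sketch below runs a set of prompts through a model several times and reports the fraction of outputs a scorer flags. Everything here is an assumption made for the example: `stub_model` stands in for a real model call, and `keyword_scorer` with its `FLAGGED_TERMS` is a deliberately crude placeholder for a trained classifier or an established safety benchmark.

```python
from typing import Callable

# Hypothetical flagged terms; keyword matching is only a placeholder scorer.
FLAGGED_TERMS = ("idiot", "guaranteed cure")


def keyword_scorer(output: str) -> bool:
    """Return True if the output contains any flagged term (case-insensitive)."""
    lowered = output.lower()
    return any(term in lowered for term in FLAGGED_TERMS)


def risky_output_rate(model: Callable[[str], str], prompts: list[str],
                      scorer: Callable[[str], bool],
                      samples_per_prompt: int = 3) -> float:
    """Return the fraction of sampled outputs that the scorer flags as risky."""
    if not prompts or samples_per_prompt < 1:
        raise ValueError("need at least one prompt and one sample per prompt")
    flagged = 0
    total = 0
    for prompt in prompts:
        # Sample repeatedly because outputs can vary for the same prompt.
        for _ in range(samples_per_prompt):
            flagged += scorer(model(prompt))
            total += 1
    return flagged / total


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; returns canned text."""
    if "medical" in prompt:
        return "This is a guaranteed cure."
    return "Here is a neutral answer."


rate = risky_output_rate(stub_model, ["medical advice?", "weather?"], keyword_scorer)
print(f"risky output rate: {rate:.2f}")
```

Tracking this rate across model versions, including after fine-tuning, is one way to notice when a change has weakened safeguards.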

In short, harmful outputs usually signal safety issues, not classic security breaches.

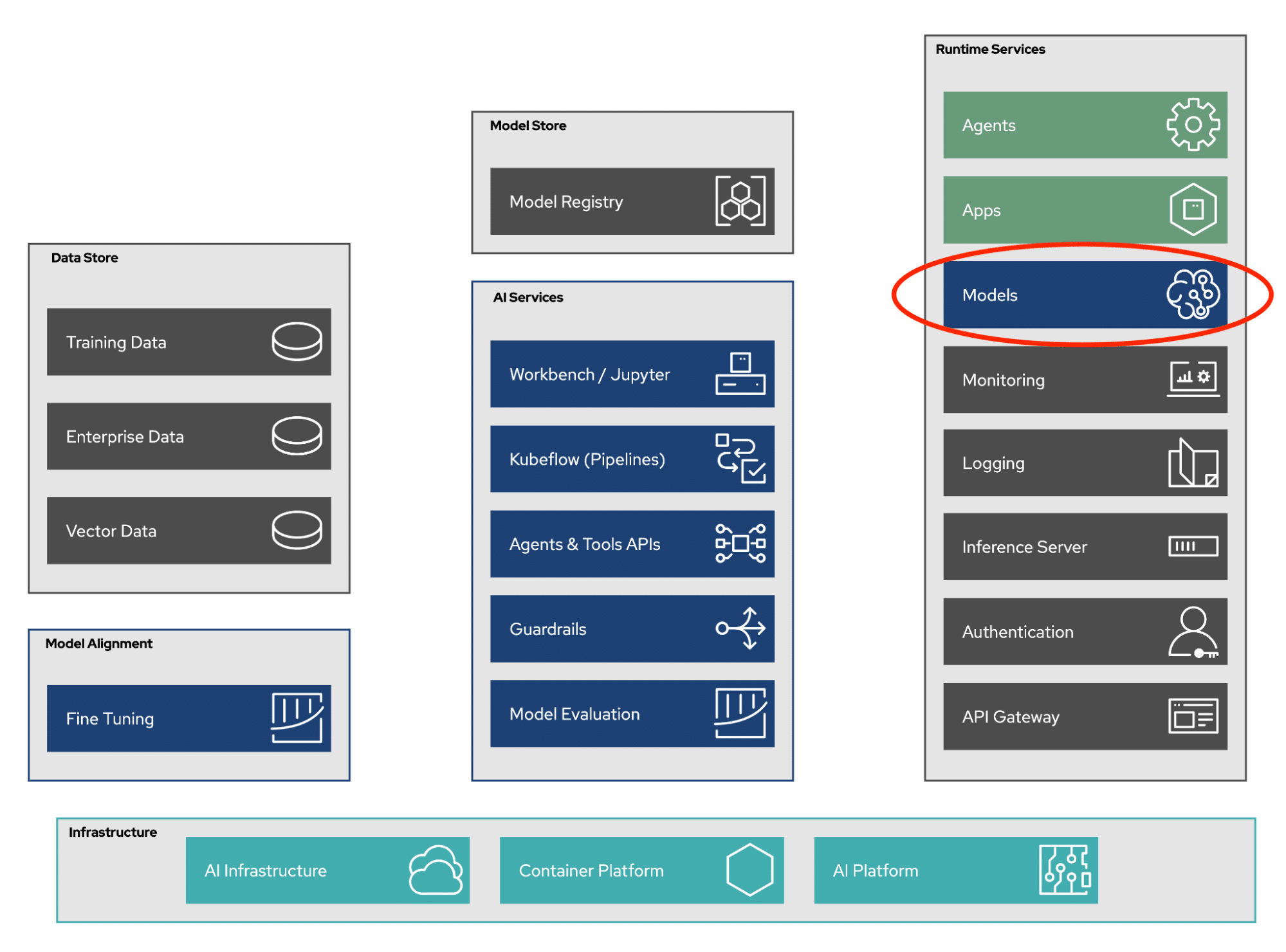

Looking Beyond the Model

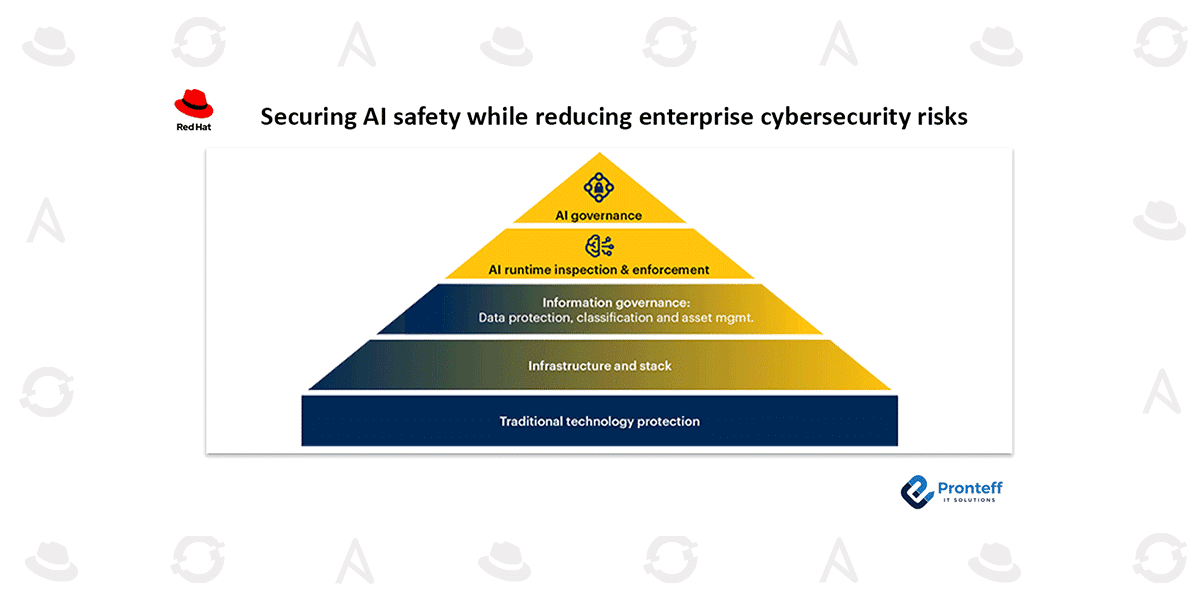

AI models often get the most attention, but they’re only part of the full architecture. Surrounding components—APIs, servers, storage, orchestration layers—make up much of the system and introduce their own risks.

A layered security approach is essential: strengthen your existing cybersecurity foundations, then add protections tailored to generative AI.

Connecting AI Security with Enterprise Cybersecurity

AI security doesn’t require discarding existing cybersecurity principles. The same foundations that protect enterprise systems today also form the backbone of secure AI deployments.

Effective security is risk management—reducing the likelihood of incidents and minimizing damage when they occur. This includes:

- Risk assessments for AI-specific threats

- Penetration tests to evaluate system resilience

- Continuous monitoring for anomalies and breaches

The classic Confidentiality, Integrity, Availability (CIA) principles still apply to AI workloads.

Organizations should extend proven practices such as:

- Secure SDLC: Embedding security throughout development.

- Supply chain security: Verifying all models, libraries, and containers.

- SIEM/SOAR integration: Tracking system behavior and detecting suspicious activity.
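One concrete piece of supply chain verification is checking a downloaded model file against a digest pinned in advance. The sketch below uses only the Python standard library. The function names are hypothetical, and it assumes the expected SHA-256 value was obtained from a trusted source such as the publisher's signed release notes.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned value."""
    actual = sha256_of_file(path)
    # hexdigest() is lowercase, so normalize the expected value before comparing.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A matching digest shows only that the file is the one the publisher listed. It says nothing about whether the model itself is trustworthy, so this check complements rather than replaces provenance review and scanning.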

Instead of building standalone AI security silos, enterprises should incorporate AI risks into existing governance frameworks such as an information security management system (ISMS). This allows existing tools, teams, and processes to address both traditional and AI-specific threats.

The Path Forward

Enterprises should adopt a holistic strategy that unifies security, governance, compliance, and AI-specific safety measures. Frameworks such as Gartner’s AI TRiSM (AI trust, risk, and security management) highlight the importance of integrated risk management across the full lifecycle of AI systems.

AI creates enormous opportunities, but it also introduces new and unfamiliar risks. By strengthening enterprise cybersecurity and adding dedicated safety controls, organizations can deploy AI confidently and responsibly.

Conclusion

AI security and AI safety are complementary areas of protection—one focused on defending systems from threats, the other on ensuring outputs remain responsible and aligned. Businesses that treat both as essential components of their broader risk management strategy will be better equipped to unlock AI’s full potential while maintaining trust with employees, customers, and regulators.