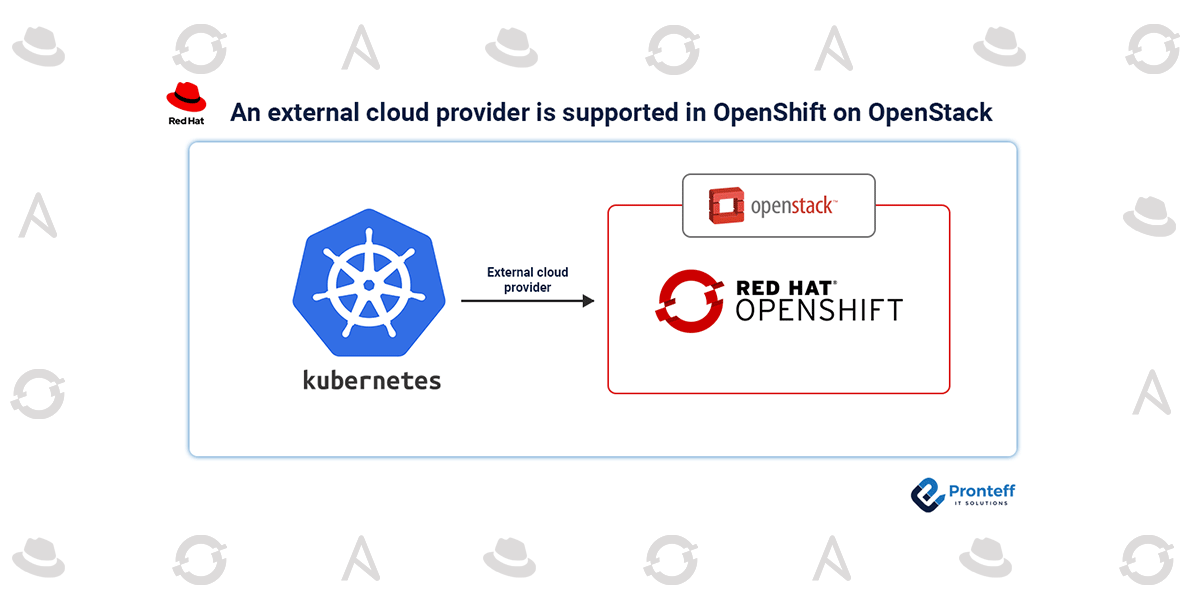

An external cloud provider is supported in OpenShift on OpenStack

What are external cloud providers?

In the past, the main Kubernetes repository contained the code for every cloud provider. However, because Kubernetes aims to run everywhere, it must support a wide range of infrastructure providers. Having determined that a single monolithic repository (and a single monolithic kube-controller-manager binary) would not scale, the Kubernetes community began developing support for out-of-tree cloud providers in 2017. These out-of-tree providers were initially intended to let the community build cloud providers for new, previously unsupported infrastructure. As the functionality matured, the community decided to migrate all of the existing in-tree cloud providers to external cloud providers as well. This effort produced the k8s.io/cloud-provider-openstack project for OpenStack clouds, alongside the k8s.io/cloud-provider-aws project for AWS and the k8s.io/cloud-provider-gcp project for Google Cloud Platform.

What are cloud providers for Kubernetes?

Kubernetes is highly customizable. You can address practically any use case by building on its built-in features, and you can handle even the trickiest configurations by extending it.

Cloud providers are a significant way to improve and extend Kubernetes. A Kubernetes cloud provider is an extension that uses the APIs of your preferred cloud or infrastructure provider to manage the lifecycle of Nodes and, optionally, Load Balancers and Networking Routes. For the OpenStack cloud provider, this means communicating with Neutron whenever we need a Networking Route, with Octavia whenever we need a Load Balancer, and with Nova whenever we need to verify the health and attributes of a Node.

But why should I care?

If this were the only change (splitting the cloud providers into independent packages and binaries), there wouldn’t be much to notice. However, once out-of-tree cloud providers were developed for each infrastructure provider, the now-legacy in-tree providers were deprecated and development shifted to the out-of-tree providers. This meant two things. First, because the in-tree providers had a finite lifespan and would no longer receive upgrades or bug fixes, every deployment had to move to the matching out-of-tree provider before the in-tree providers were finally removed. Second, and perhaps more interestingly, because all new development targets the out-of-tree providers, the feature gap between them and the legacy in-tree providers keeps growing. For the OpenStack provider, this gap includes significant features such as support for authenticating to OpenStack services with application credentials, support for UDP load balancers, and support for load-balancer health monitors, which allow Services to route external traffic only to node-local endpoints. And we’re not talking about just one or two features. We want OpenShift users on every platform to benefit from these features, so it was our responsibility to make this transition as simple as possible.

Describing CCCMO

Fortunately, OpenShift 4.x makes extensive use of these cool things called Operators. They automate the installation, configuration, and management of instances of Kubernetes-native applications. Although other projects also use the Operator pattern, OpenShift’s Operators are unique in that they manage the lifecycles of the various OpenShift cluster components as well as those of the applications that run on the cluster. OpenShift’s cluster Operators can be thought of as the “actual” OpenShift installer, whereas openshift-installer is a utility that creates the scaffolding the individual Operators need to carry out their tasks. You can read more about Operators in the OpenShift documentation. One such cluster Operator is the cluster-cloud-controller-manager-operator (CCCMO), which manages the lifecycle of the various external cloud providers, specifically the Cloud Controller Manager binaries that each cloud provider ships. On OpenStack clouds, CCCMO has been partially supported since the OpenShift 4.9 release and is enabled by default as of the 4.12 release.
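To see CCCMO at work on a running cluster, you can query the ClusterOperator through which it reports its status. The helper below is a minimal sketch, not part of OpenShift: it assumes the ClusterOperator is named cloud-controller-manager, and the function name ccm_operator_available and the OC variable (used to swap out the client binary) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper, not OpenShift tooling. Assumes the ClusterOperator
# reported by CCCMO is named "cloud-controller-manager".
# OC selects the client binary and defaults to "oc".
OC="${OC:-oc}"

# Succeeds only when the ClusterOperator Available condition is "True".
ccm_operator_available() {
  available=$("$OC" get clusteroperator cloud-controller-manager \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')
  [ "$available" = "True" ]
}
```

For example, `ccm_operator_available && echo "CCCMO reports Available"`. Plain `oc get clusteroperator cloud-controller-manager` shows the same condition in tabular form.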

What makes CCCMO so magical?

For the CCM to work as intended when it is enabled during an upgrade from OpenShift 4.11 to 4.12, the contents of the user-editable cloud-provider-config config map in the openshift-config namespace must be migrated to a new, non-user-editable config map named cloud-conf in the openshift-cloud-controller-manager namespace. From version 4.11 onward, CCCMO manages this migration using cloud-specific transformers that remove options no longer relevant to the CCM and add new options to the new config map. The following configuration options are adjusted:

- [Global] secret-name, [Global] secret-namespace, and [Global] kubeconfig-path are dropped, since this information is contained in the clouds.yaml file.

- [Global] use-clouds, [Global] clouds-file, and [Global] cloud are added, ensuring the clouds.yaml file is used for sourcing OpenStack credentials.

- The entire [BlockStorage] section is removed, as all storage-related actions are now handled by the CSI drivers.

- The [LoadBalancer] use-octavia option is always set to True, and the [LoadBalancer] enabled option is set to False if Kuryr is present.
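The rules above can be sketched as a small awk filter over an INI-style cloud.conf. This is a hypothetical illustration of the transformation, not CCCMO’s actual transformer, which is written in Go. The function name transform_cloud_conf, the clouds-file path /etc/openstack/secret/clouds.yaml, and the cloud name openstack are assumptions made for the example.

```shell
#!/bin/sh
# Hypothetical sketch of the config rewrites listed above; NOT CCCMO code.
# Assumptions: the input is a flat INI file, clouds-file is
# /etc/openstack/secret/clouds.yaml, and the clouds.yaml entry is "openstack".
#
# Usage: transform_cloud_conf FILE [KURYR]   (KURYR=true if Kuryr is present)
transform_cloud_conf() {
  awk -v kuryr="${2:-false}" '
    # Append the options that must exist at the end of a section.
    function finish(name) {
      if (name == "Global") {
        print "use-clouds = true"
        print "clouds-file = /etc/openstack/secret/clouds.yaml"
        print "cloud = openstack"
        seen_global = 1
      } else if (name == "LoadBalancer") {
        print "use-octavia = True"
        if (kuryr == "true") print "enabled = False"
        seen_lb = 1
      }
    }
    # Section header such as [Global]: close the previous section first.
    /^[ \t]*\[.*][ \t]*$/ {
      finish(section)
      section = $0
      sub(/^[ \t]*\[/, "", section)
      sub(/][ \t]*$/, "", section)
      if (section != "BlockStorage") print
      next
    }
    # The whole [BlockStorage] section is dropped.
    section == "BlockStorage" { next }
    {
      key = $0
      sub(/=.*/, "", key)
      gsub(/[ \t]/, "", key)
      # Credentials now come from clouds.yaml.
      if (section == "Global" && (key == "secret-name" || key == "secret-namespace" || key == "kubeconfig-path")) next
      # Options that finish() re-emits with their new values.
      if (section == "Global" && (key == "use-clouds" || key == "clouds-file" || key == "cloud")) next
      if (section == "LoadBalancer" && (key == "use-octavia" || (kuryr == "true" && key == "enabled"))) next
      print
    }
    END {
      finish(section)
      # Create the sections if the input lacked them entirely.
      if (!seen_global) { print "[Global]"; finish("Global") }
      if (!seen_lb) { print "[LoadBalancer]"; finish("LoadBalancer") }
    }
  ' "$1"
}
```

For example, `transform_cloud_conf cloud.conf true` prints the transformed configuration for a cluster with Kuryr to standard output. You can compare the result with what CCCMO actually produced by running `oc get configmap cloud-conf -n openshift-cloud-controller-manager -o yaml`.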

Because core cluster functionality moves between components during an upgrade, the order of operations matters just as much. CCCMO ensures that cloud-dependent components are upgraded in the correct order and that each stage of the upgrade begins only after the earlier stages have finished and been verified.

How do I enable the external cloud provider on my cluster?

Simple solution: just upgrade! If you already have an OpenShift 4.12 cluster running on OpenStack, nothing further is needed to enable the external OpenStack cloud provider. To verify that the external cloud provider is enabled on a cluster, we recommend checking the following:

- The openstack-cloud-controller-manager pods are available and running:

    $ oc get pods -n openshift-cloud-controller-manager
    NAME                                                  READY   STATUS
    openstack-cloud-controller-manager-769dc7b785-mgppt   1/1     Running
    openstack-cloud-controller-manager-769dc7b785-n7nsj   1/1     Running

- The cloud-specific controllers are no longer run by the kube-controller-manager; the external OpenStack cloud provider manages them instead. To confirm this, examine the config config map in the openshift-kube-controller-manager namespace, which should contain a cloud-provider entry with the value external:

    $ oc get configmap config -n openshift-kube-controller-manager -o yaml | grep cloud-provider

- The kubelet MachineConfig for both the master and worker pools should set the kubelet’s cloud-provider flag to external:

    $ oc get MachineConfig 01-master-kubelet -o yaml | grep external
          --cloud-provider=external \
    $ oc get MachineConfig 01-worker-kubelet -o yaml | grep external
          --cloud-provider=external \
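The three checks can be bundled into one script. The sketch below is hypothetical (check_external_ccm and the OC override are illustrative names, not OpenShift tooling). It assumes the pod, config map, and MachineConfig names shown above, and uses grep -A1 so the cloud-provider check works whether the value sits on the same line (JSON) or on the next line (YAML list).

```shell
#!/bin/sh
# Hypothetical helper bundling the three checks above; not OpenShift tooling.
# OC selects the client binary and defaults to "oc".
OC="${OC:-oc}"

check_external_ccm() {
  rc=0

  # 1. At least one openstack-cloud-controller-manager pod is Running.
  if ! "$OC" get pods -n openshift-cloud-controller-manager --no-headers \
      | grep '^openstack-cloud-controller-manager-' | grep -q 'Running'; then
    echo "FAIL: no Running openstack-cloud-controller-manager pod"
    rc=1
  fi

  # 2. kube-controller-manager is configured with cloud-provider=external.
  #    -A1 also catches YAML lists where "external" is on the next line.
  if ! "$OC" get configmap config -n openshift-kube-controller-manager -o yaml \
      | grep -A1 'cloud-provider' | grep -q 'external'; then
    echo "FAIL: kube-controller-manager config lacks cloud-provider=external"
    rc=1
  fi

  # 3. The kubelet MachineConfig of each pool passes --cloud-provider=external.
  for pool in master worker; do
    if ! "$OC" get machineconfig "01-${pool}-kubelet" -o yaml \
        | grep -q -- '--cloud-provider=external'; then
      echo "FAIL: 01-${pool}-kubelet lacks --cloud-provider=external"
      rc=1
    fi
  done

  [ "$rc" -eq 0 ] && echo "OK: external cloud provider appears to be enabled"
  return "$rc"
}
```

After sourcing the script, run `check_external_ccm`; it prints one line per failed check and returns a non-zero status if any check fails, which makes it easy to use in CI or upgrade runbooks.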

Conclusion

Now that OpenStack has made the leap, you can expect the other infrastructure providers supported by OpenShift to follow suit. They will likely face issues similar to those OpenStack did, including migrating between configuration schemas, handling differing feature sets, and inspecting behavior during upgrades. The solutions will vary as well.

Meanwhile, this switch has done more than make it possible for other external cloud providers to follow suit. It also ensures that we now use a cloud provider that offers more thorough and comprehensive control of cloud resources, such as nodes and load balancers.