A new version of Red Hat OpenShift is now available

This post looks at what is new in Red Hat OpenShift 4.14.

In the coming weeks, hosted control planes with OpenShift Virtualization will also become generally available. This lets you use the same underlying base OpenShift cluster to run both hosted control planes and virtual machines created with OpenShift Virtualization. By packing several hosted control planes and hosted clusters onto the same underlying bare metal infrastructure, hosted control planes and OpenShift Virtualization let you run and manage virtual machine workloads alongside container workloads and make more effective use of resources.

We previously announced that Red Hat OpenShift Extended Update Support (EUS) releases, starting with Red Hat OpenShift 4.12 and continuing with all subsequent even-numbered releases, would be supported for 24 months for OpenShift deployments on the x86_64 architecture. Starting with OpenShift Container Platform 4.14, the 24-month EUS term is extended to the 64-bit ARM, IBM Power (ppc64le), and IBM Z (s390x) platforms, and it will continue on all subsequent even-numbered releases. OpenShift Life Cycle and OpenShift EUS Overview have more details about Red Hat OpenShift EUS.
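The eligibility rule described above can be written as a small check. This is a minimal sketch that encodes only what this post states. The function name `has_24_month_eus` and the table `FIRST_EUS_MINOR` are hypothetical, and `aarch64` is an assumed identifier for 64-bit ARM, since the post does not give one. The life cycle documentation remains the authority.

```python
# Sketch of the 24-month EUS rule as described in this post.
# All names here are hypothetical and chosen for illustration only.

# First 4.y minor release with 24-month EUS, per architecture (from the text).
FIRST_EUS_MINOR = {
    "x86_64": 12,
    "aarch64": 14,  # 64-bit ARM (identifier is an assumption)
    "ppc64le": 14,  # IBM Power
    "s390x": 14,    # IBM Z
}


def has_24_month_eus(version: str, arch: str) -> bool:
    """Return True if OpenShift `version` on `arch` falls under the stated rule."""
    major, minor = (int(part) for part in version.split(".")[:2])
    if major != 4 or arch not in FIRST_EUS_MINOR:
        return False
    # Only even-numbered minor releases are EUS releases.
    return minor % 2 == 0 and minor >= FIRST_EUS_MINOR[arch]


if __name__ == "__main__":
    checks = [("4.12", "x86_64"), ("4.13", "x86_64"),
              ("4.12", "s390x"), ("4.14", "s390x")]
    for version, arch in checks:
        print(version, arch, has_24_month_eus(version, arch))
```

Keeping the first eligible minor release in a per-architecture table means a future change to the rule would only touch the data, not the logic.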

Safeguard your Workloads and Infrastructure

Red Hat OpenShift 4.14 introduces new security features and improvements that further strengthen secure application development across the hybrid cloud:

- Secrets and config maps can now be shared across OpenShift namespaces using the OpenShift Shared Resource CSI Driver. This makes it easier to use your Red Hat entitlements during image builds.

- For self-managed OpenShift clusters on AWS, you can now apply your existing AWS security groups to control plane and compute machines when installing into an existing Amazon Virtual Private Cloud (VPC).

- You can now create and manage OpenShift clusters with temporary, limited privilege credentials for self-managed OpenShift on Azure. To securely access Azure cloud resources, use workload identities from Azure Active Directory (AD) in combination with Azure managed identities for Azure resources. For more information, see Setting up an Azure cluster to use temporary credentials.

- Confidential computing: installing a cluster on Google Cloud Platform (GCP) using Confidential VMs is now generally available. Installing a cluster on Azure using Confidential VMs is also possible and is available as a Technology Preview.

- The Ingress Node Firewall Operator is now generally available.

- We are pleased to present the Technology Preview of the Secrets Store CSI (SSCSI) Driver Operator. Customers frequently use secret storage systems such as AWS Secrets Manager, AWS Systems Manager Parameter Store, and Azure Key Vault to manage application secrets safely, and the SSCSI Driver Operator integrates with these systems. The operator is aimed at security-conscious customers who need secrets and other sensitive information to be handled in a centralized secrets management system that meets their compliance requirements for encryption and access. It lets them pull secrets that are typically managed outside of OpenShift clusters. The SSCSI driver mounts external secrets into the application pod as ephemeral in-line volumes, so that neither the volume nor the secret content is preserved in the cluster after the pod is deleted. To integrate with an external secret store, the SSCSI Driver requires an SSCSI Provider. We anticipate collaborating with our partners to deliver the end-to-end solution in upcoming releases.

- Security context constraints (SCCs) are a useful tool for managing permissions among the pods in your cluster. Default SCCs are created during installation and when you install Operators or other OpenShift platform components. Cluster administrators can also create their own SCCs using the OpenShift CLI (oc). Occasionally, however, these custom SCCs cause preemption problems with the following outcomes:

  - Changes made to out-of-the-box SCCs may cause core workloads to malfunction.

  - Core workloads may malfunction when new, higher-priority SCCs are added and take precedence over the out-of-the-box SCCs the workloads were previously pinned to during SCC admission.

We are pleased to present the SCC Preemption Prevention feature in Red Hat OpenShift 4.14, which allows you to bind an SCC explicitly to your workloads. To do this, set the openshift.io/required-scc annotation on your workload. Any resource that can set a pod manifest template, such as a deployment or daemon set, should have this annotation set.
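As an illustration of the annotation described above, the following minimal sketch adds openshift.io/required-scc to the pod template of a workload manifest held as plain Python data. The helper name `pin_scc`, the sample deployment, and its image are hypothetical. Placing the annotation in the pod template's metadata is an assumption based on the description above, and `restricted-v2` is used only as an example SCC name.

```python
import json

# Annotation key named in this post.
REQUIRED_SCC_ANNOTATION = "openshift.io/required-scc"


def pin_scc(workload: dict, scc_name: str) -> dict:
    """Return a copy of `workload` whose pod template requests `scc_name`."""
    pinned = json.loads(json.dumps(workload))  # deep copy of plain JSON data
    template_meta = pinned["spec"]["template"].setdefault("metadata", {})
    annotations = template_meta.setdefault("annotations", {})
    annotations[REQUIRED_SCC_ANNOTATION] = scc_name
    return pinned


if __name__ == "__main__":
    # Hypothetical deployment: the name, labels, and image are placeholders.
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "example-app"},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": {"app": "example-app"}},
            "template": {
                "metadata": {"labels": {"app": "example-app"}},
                "spec": {
                    "containers": [
                        {"name": "app", "image": "registry.example.com/app:latest"}
                    ]
                },
            },
        },
    }
    print(json.dumps(pin_scc(deployment, "restricted-v2"), indent=2))
```

The script prints the manifest as JSON, so the output can be reviewed or stored before it is applied to a cluster.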

- Safe token use with the oc CLI: When you log in with an access token, passing the token on the command line with oc login --token is not secure. The token is visible through the "ps" command and is recorded in the Bash history, so any system administrator can search for it. In this release, we’ve added oc login --web to provide a secure option for CLI-based logins through a user’s OpenID Connect (OIDC) identity provider. The command opens a web browser in which the user logs in to their OIDC identity provider. After a successful login, the user can continue to access the OpenShift APIs with oc commands.

- The U.S. Defense Information Systems Agency Security Technical Implementation Guide (DISA STIG) for Red Hat OpenShift 4 is now available for download. In addition to the DISA STIG profile, the Compliance Operator ships with the most recent CIS 1.4 benchmark for OpenShift.

- Red Hat Advanced Cluster Security Cloud Service, which comes with a free 60-day trial, can be used to safeguard your Red Hat OpenShift environments and Kubernetes services across all major cloud providers, including Amazon Elastic Kubernetes Service (EKS), Microsoft Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE). A fully managed software-as-a-service, Red Hat Advanced Cluster Security Cloud Service safeguards Kubernetes and containerized applications during the entire application lifecycle, including build, deploy, and runtime.

Modernize your Virtual Machines with Red Hat OpenShift Virtualization on AWS and ROSA

Red Hat OpenShift Virtualization is now supported on AWS and on Red Hat OpenShift Service on AWS (ROSA). With the Migration Toolkit for Virtualization, you can move your virtual machines (VMs) to AWS or ROSA and run them alongside containers, streamlining management and shortening time to production. This lets you benefit from the ease of use and speed of a modern application platform while preserving your existing virtualization investments.

Better Scaling and Stability through Open Virtual Network (OVN) Optimizations

To remove obstacles to the scale and performance of the networking control plane, Red Hat OpenShift 4.14 introduces an optimization of OVN-Kubernetes (OVN-K) that changes its internal architecture to reduce operational latency. Network flow data is now localized on each cluster node rather than centralized in a large control plane. This reduces operational latency and cluster-wide traffic between worker and control plane nodes, so cluster networking scales linearly with the number of nodes, which benefits larger clusters. Because network flow data is localized on each node, Raft leader election among control plane nodes is no longer required, which eliminates a major source of instability. Localized network flow data also limits the impact of a single node failure to that node alone, leaving the rest of the cluster’s networking unaffected and making the cluster more resilient to failure scenarios. Refer to the OVN-Kubernetes architecture for additional details.

Scale AI and Graphics Workloads with Red Hat OpenShift, NVIDIA L40S, and NVIDIA H100

Red Hat and NVIDIA are collaborating to unlock the potential of AI for every business by providing an end-to-end enterprise platform tailored for AI workloads. We are also happy to announce that Red Hat OpenShift supports the NVIDIA L40S and NVIDIA H100 GPU accelerators across the hybrid cloud. Together, Red Hat OpenShift and NVIDIA power workloads in the next generation of data centers, including generative AI, training and inference of large language models (LLMs), intelligent chatbots, graphics, and video processing applications.

Automatic Migration of VMware vSphere Container Storage Interface (CSI)

After upgrading to Red Hat OpenShift 4.14, clusters running OpenShift on VMware vSphere that were first installed in Red Hat OpenShift 4.12 or earlier will need to go through the vSphere CSI migration. The migration of vSphere CSI is smooth and automatic. The use of all current API objects, including storage classes, persistent volumes, and persistent volume claims, is unaffected by migration.

Red Hat OpenShift’s sustainability in the cloud

Effective resource management in Kubernetes environments depends on tracking and optimizing power consumption. To meet this need, OpenShift 4.14 includes a Developer Preview of power monitoring for Red Hat OpenShift. Power monitoring is a productized realization of Kepler, the Kubernetes-based Efficient Power Level Exporter. It uses eBPF to compute and export granular power consumption for pods, namespaces, and nodes, and it is available from OperatorHub on OpenShift. It provides information derived from Intel’s Running Average Power Limit (RAPL) or the Advanced Configuration and Power Interface (ACPI), with the option to include pre-trained models for configurations where this data is unavailable. Kepler uses such a model to estimate workload energy consumption in private data centers and public clouds, which helps with future resource planning and optimization.
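To show how exported energy readings of this kind might be used, the following minimal sketch rolls per-pod energy up to namespaces and converts between joules, watts, and kilowatt-hours. It assumes the readings are cumulative energy counters in joules. The function names and the sample readings are invented for illustration and do not come from Kepler or from any real cluster.

```python
from collections import defaultdict

JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6e6 J


def average_watts(joules_start: float, joules_end: float, seconds: float) -> float:
    """Average power over an interval, from two cumulative energy readings."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    return (joules_end - joules_start) / seconds


def energy_by_namespace(samples):
    """Sum per-pod energy in joules into per-namespace totals."""
    totals = defaultdict(float)
    for namespace, _pod, joules in samples:
        totals[namespace] += joules
    return dict(totals)


if __name__ == "__main__":
    # Invented sample data: (namespace, pod, joules consumed in the window).
    samples = [
        ("shop", "cart-1", 1200.0),
        ("shop", "cart-2", 800.0),
        ("batch", "etl-1", 5400.0),
    ]
    for namespace, joules in energy_by_namespace(samples).items():
        print(f"{namespace}: {joules:.0f} J = {joules / JOULES_PER_KWH:.6f} kWh")
    # A counter that grows by 3000 J in 60 s corresponds to 50 W on average.
    print(average_watts(10_000.0, 13_000.0, 60.0), "W")
```

Dividing the change in a cumulative counter by the elapsed time gives average power, which is the usual way to turn energy counters into a figure that can be compared across workloads.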

OpenShift Across the Hybrid Cloud

Red Hat Advanced Cluster Management (RHACM) running in AWS, Azure, and Google Cloud now lets you centrally deploy and manage on-premises bare metal clusters. This hybrid cloud solution extends the reach of your central management interface so that you can deliver bare metal clusters into restricted environments. RHACM also offers an improved user experience for deploying OpenShift on Nutanix, expanding the range of partnerships that provide metal infrastructure where you need it.

Red Hat OpenShift Data Foundation (ODF) 4.14 brings regional disaster recovery (DR) for Red Hat OpenShift workloads to general availability, improving resiliency across the hybrid cloud. Combined with RHACM, it addresses business continuity requirements for stateful workloads and lets administrators offer DR solutions for geographically dispersed clusters. For mission-critical workloads, asynchronous replication can be configured at application-level granularity to achieve the appropriate Recovery Point Objective (RPO) and Recovery Time Objective (RTO).
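To make the link between an asynchronous replication schedule and an RPO target concrete, here is a minimal sketch built on a deliberately simplified model: replication starts at a fixed interval, each transfer takes a fixed time no longer than the interval, and a failure just before a transfer completes loses everything written since the previous replicated point. The function names and the numbers are hypothetical and do not describe ODF or RHACM behavior.

```python
def worst_case_data_loss(sync_interval_min: float, transfer_time_min: float) -> float:
    """Upper bound on lost data, in minutes of writes, under the simplified model.

    If the failure happens just before a transfer finishes, the most recent
    usable copy is one full interval older than the copy in flight.
    """
    return sync_interval_min + transfer_time_min


def meets_rpo(sync_interval_min: float, transfer_time_min: float,
              rpo_target_min: float) -> bool:
    """Check whether a schedule satisfies the RPO target under this model."""
    return worst_case_data_loss(sync_interval_min, transfer_time_min) <= rpo_target_min


if __name__ == "__main__":
    # Hypothetical schedules checked against a 10-minute RPO target.
    for interval, transfer in [(5, 2), (15, 2)]:
        print(f"interval={interval} min, transfer={transfer} min ->",
              meets_rpo(interval, transfer, rpo_target_min=10))
```

Under this model, shortening the replication interval is the main lever for tightening RPO, while RTO depends on how quickly the application can be brought up at the recovery site and is not modeled here.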

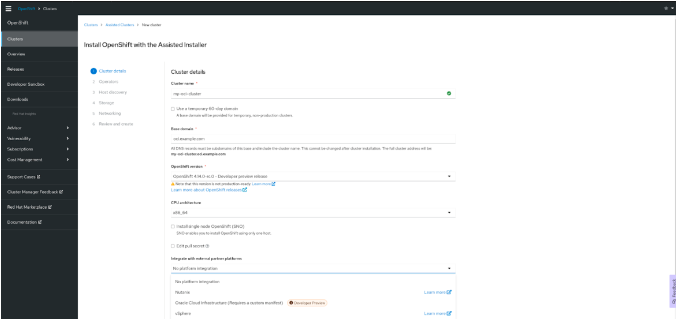

We’re expanding OpenShift’s compatibility with Oracle Cloud Infrastructure, which is currently in Developer Preview. Using the Agent-based Installer for restricted network deployments or the Assisted Installer for connected deployments, you can install OpenShift on Oracle Cloud Infrastructure. Fill out this form to request access so you can test OpenShift on Oracle Cloud Infrastructure.

This release also includes MicroShift 4.14, which is used in Red Hat Device Edge. MicroShift makes far-edge deployments possible. Wherever devices are placed in the field, it offers lightweight, enterprise-ready Kubernetes container orchestration that extends operational consistency across edge and hybrid cloud environments. Being lightweight is essential for supporting varied use cases and workloads on small, resource-constrained devices at the farthest edge. As a logical extension of Kubernetes environments, it balances the efficiency of low resource utilization with the tools and processes that Kubernetes users already know. Assembly lines, Internet of Things gateways, informational kiosks, and vehicle systems are a few examples of use cases where operations must cope with limited power, cooling, computing capacity, and connectivity.