How to improve security by leveraging containers on quay.io?

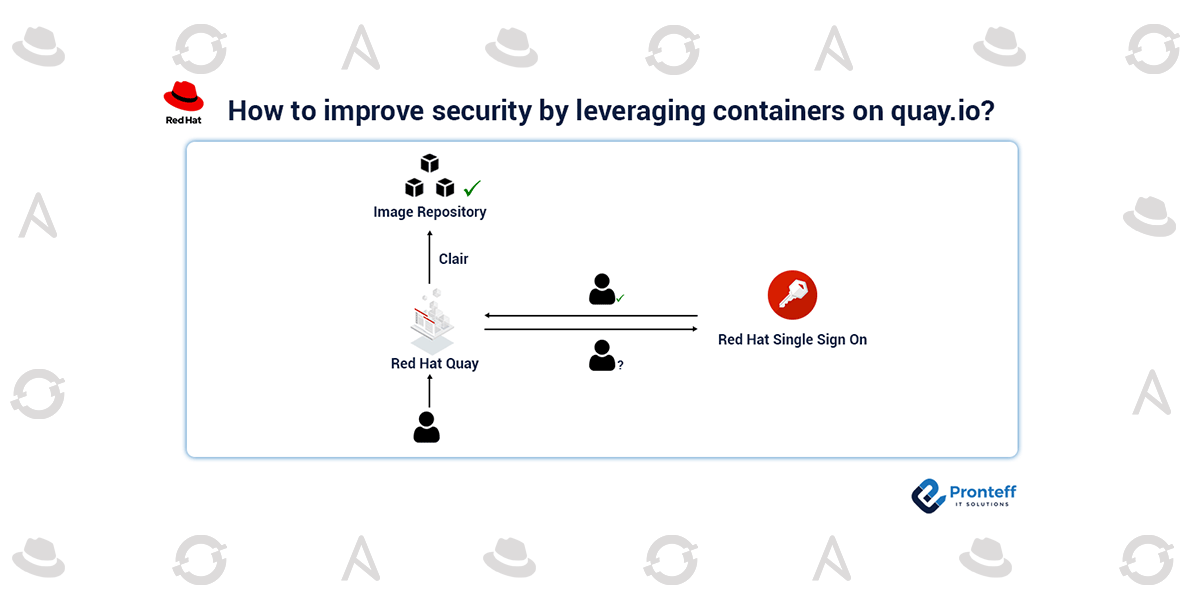

Clair is a static analyzer that indexes the contents of container images and then matches what it finds against known vulnerabilities. To give customers a more uniform view of their security vulnerabilities, Clair is used in a variety of other Red Hat products in addition to being bundled with Red Hat Quay and Quay.io. Clair is used not only within Red Hat but also by numerous outside teams, and it is supported by a strong open-source community.

Our primary focus is on container image layers, where we look for hints about the kind of system the layer’s file system is describing and produce a report that enables users to quickly see the installed software the container contains and any vulnerabilities that software may be subject to.

The Problem

We can keep testing Clair's interpretations of well-known images until the cows come home, but containers in the wild can be constructed in many different ways and serve as the basis for numerous additional containers. This leaves us with a loosely bounded input problem: the inputs we may encounter are chaotic and far exceed what our tests can cover. How can we resolve these issues before Clair is delivered to customers?

The Solution

We can't run Clair against every known container image in our CI pipeline, but we can Operate First. At Red Hat, this approach means running a high-traffic service on the most recent code so that bugs and corner cases surface before a release. When we stamp a release and customers begin to upgrade, we can be confident that their instances will be stable and fit for purpose.

As mentioned earlier, Quay.io's vulnerability results are powered by Clair. Quay.io stores approximately 10 million container images, the vast majority of which are multi-layered. Clair frequently re-indexes this corpus as scanners evolve or are added, because the bill of materials for each container needs to be updated. We find problematic layers by looking for error patterns and digging further into the logs. For the most frequent errors, we either analyze the manifest directly if it is publicly available or attempt to reconstruct the problematic layer from the contextual data in the logs. Occasionally, this requires redeploying with more logging.

As soon as we have a layer, we can use it as test data and build regression tests around it. We can then work on a fix for the edge case, deploy it, and validate it. The amount of time and customer goodwill this saves us is enormous. Problems are identified quickly, and feedback after deployment is rapid. Once we have verified that the code is stable in our staging and production environments, we know we have a battle-tested release that is suitable for a variety of production environments.

Operating first also means that, once we have maintained the service at a sizeable enough scale, we can give customers accurate guidance on the storage, memory, and CPU requirements of their own high-traffic environments. We can also predict problems that may occur in our customers' environments and set precise expectations for offline procedures such as data transfers.

FIRST EXAMPLE: PROBLEMATIC LAYERS IN THE WILD

Container images are made up of layers, and a layer is just a tar file: a collection of files. Before we can examine a container image layer by layer, we must build a representation of each layer's file system so that the various scanners can find the files they need (a package DB, a jar file, etc.). Since layers are just tar files, building an addressable representation of a file system from the files in the archive shouldn't be that challenging, right? That holds about 95% of the time. But in the wild, the remaining 5% of layers are responsible for 100% of the headaches.

Clair includes the Go package tarfs, which implements Go's fs.FS interface over a tar archive. Here, it is used to interact with the layer file. This works most of the time, and when it does, the fs.FS representation is simple to construct and use.

Some container image layers, built in the darkest corners of the internet, combine two tars into one layer, for example. That doesn't seem too absurd on its own, but consider this:

    ├── tar_1
    │   ├── run
    │   │   └── logs
    │   │       └── log.txt
    │   └── var
    │       └── run -> ../run
    ├── tar_2
    │   └── var
    │       └── run
    │           └── console
    │               └── algo.txt

In the first tar, var/run is declared as a symbolic link to run (quite standard); in the second tar, it is declared as a regular directory. Does this imply that log.txt should be accessible through var/run/logs/log.txt and algo.txt through run/console/algo.txt? We concluded that the answer is yes, although, as you might anticipate, we had not given this any thought before discovering it in the wild, apparently among CentOS base layers. As Quay tried to index the problematic manifests more often, the errors became more frequent until they eventually triggered the 5XX error rate alarm.
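The resolution described above can be illustrated with a small in-memory model. This is a sketch under stated assumptions, not how Clair's tarfs handles the case: the `layer` type and its methods are hypothetical, entries are applied in archive order, and a path that passes through an existing symlink is stored at the symlink's target.

```go
package main

import (
	"fmt"
	"path"
	"strings"
)

// layer is a hypothetical in-memory model of a merged layer.
type layer struct {
	links map[string]string // symlink path -> link target
	files map[string]string // resolved file path -> content
}

func newLayer() *layer {
	return &layer{links: map[string]string{}, files: map[string]string{}}
}

// resolve walks name one component at a time and follows any symlink it
// meets. Simplification: symlinks inside a link target's own intermediate
// components are not re-resolved.
func (l *layer) resolve(name string) string {
	cur := ""
	for _, part := range strings.Split(path.Clean(name), "/") {
		cur = path.Join(cur, part)
		for hops := 0; hops < 16; hops++ { // guard against symlink loops
			target, ok := l.links[cur]
			if !ok {
				break
			}
			if path.IsAbs(target) {
				cur = strings.TrimPrefix(path.Clean(target), "/")
			} else {
				cur = path.Join(path.Dir(cur), target)
			}
		}
	}
	return cur
}

// addSymlink records a symlink after resolving its parent directory.
func (l *layer) addSymlink(name, target string) {
	parent := l.resolve(path.Dir(name))
	l.links[path.Join(parent, path.Base(name))] = target
}

// addFile stores a file under its resolved parent directory, so a
// "directory" that is really a symlink lands at the link target.
func (l *layer) addFile(name, content string) {
	parent := l.resolve(path.Dir(name))
	l.files[path.Join(parent, path.Base(name))] = content
}

// read looks a file up through any symlinks in its path.
func (l *layer) read(name string) (string, bool) {
	content, ok := l.files[l.resolve(name)]
	return content, ok
}

func main() {
	l := newLayer()
	// Entries from tar_1.
	l.addFile("run/logs/log.txt", "log")
	l.addSymlink("var/run", "../run")
	// Entry from tar_2, where var/run appears as a regular directory.
	l.addFile("var/run/console/algo.txt", "algo")

	for _, p := range []string{
		"var/run/logs/log.txt",
		"run/console/algo.txt",
		"var/run/console/algo.txt",
	} {
		_, ok := l.read(p)
		fmt.Printf("%s found=%v\n", p, ok)
	}
}
```

In this model all three paths resolve, which matches the conclusion that both files should be reachable through either var/run or run.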

This is only one example of a problematic layer that we have encountered. We also find issues where the documented behavior of a build system differs from its actual behavior. This recently occurred with early RHEL 9 layers and the RHEL repository scanner. The embedded content-set files are placed at root/buildinfo/content_manifests/, and Clair attempts to parse CPE information from them. However, the expected array wasn't supplied, resulting in an error, and our secondary detection technique (using the Red Hat Container API) wasn't activated.

SECOND EXAMPLE: OPERATIONAL CONCERNS

Away from challenging layers, we have also recently validated operational improvements in our production environment. Because our customers' environments vary widely and often operate under stringent scaling limits, we are constantly looking for ways for Clair to coexist peacefully with constrained memory, CPU, and storage. As part of our effort to prevent database bloat, we examined index utilization in production and found a sizable, underutilized database index. Once the migration was written, we applied it to our staging environment to verify that it behaved as intended and that latency was unaffected before applying it to production.

Conclusion

We constantly release new code, which may have defects, and index strange new containers. What matters to us is that our software has already been examined, tested, and forged in production before we distribute it to customers.

Of course, such a strategy has drawbacks that shouldn't be overlooked. Once you have meaningful traffic and data, concerns such as on-call scheduling, additional release tooling, infrastructure costs, and SLAs begin to appear. As a result, not every company will be able to Operate First with a SaaS deployment, but teams should think about other approaches to encourage usage, such as internal instances, hosted sandboxes, and GitHub Actions.

For us, the Operate First approach has made it possible to tackle the unbounded input problem and to execute consequential migrations with confidence. It has not only unlocked new advantages for our way of working but also enabled us to head off problems before they reach customers.