Red Hat Advanced Cluster Management Application Pull Controller

In this blog, we will learn about the Red Hat Advanced Cluster Management (RHACM) Application Pull Controller.

Dependencies and architecture

The Argo CD pull model controller on the hub cluster creates ManifestWork objects that wrap Application objects as their payload. ManifestWork is a key concept for delivering workloads to the managed clusters.

When the RHACM agent on the managed cluster detects the ManifestWork on the hub cluster, it pulls the Application from the hub.

Prerequisites:

- A running RHACM 2.8 instance

- One or more managed clusters in RHACM

Configuring the pull model

- Install the OpenShift GitOps operator (version 1.9.0 or higher) in the installed namespace openshift-gitops on the hub cluster and all target managed clusters.

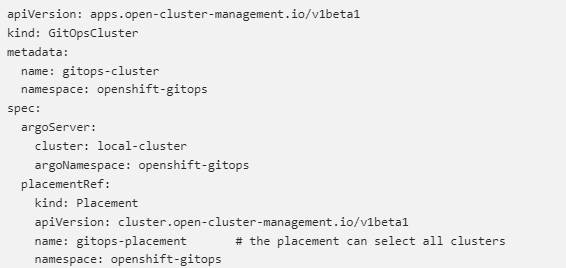

- Create a GitOpsCluster resource that references a Placement resource. The Placement resource selects all the managed clusters that need to support the pull model. As a result, managed cluster secrets are generated in the Argo CD server namespace. Every managed cluster needs a cluster secret in the Argo CD server namespace on the hub cluster, because the Argo CD ApplicationSet controller requires it to propagate the Argo CD application template for that managed cluster. See the following example:

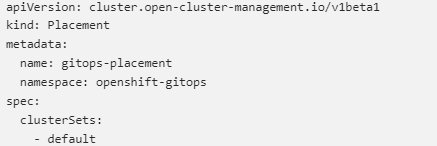

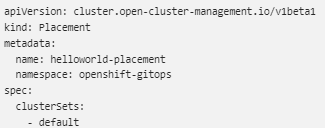

- Create a Placement resource for the GitOpsCluster resource from the previous step. Assuming that every target managed cluster is added to the default clusterset, the following example can be used:
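The two setup resources described in the steps above can be sketched together as follows. This is a minimal sketch under stated assumptions, not the original listing: the names `pull-model-placement` and `pull-model-gitops-cluster` are hypothetical, the API versions are the ones assumed for RHACM 2.8, and the Placement assumes that a ManagedClusterSetBinding for the default clusterset already exists in the openshift-gitops namespace.

```yaml
# Sketch only: resource names are hypothetical, adjust them to your environment.
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: Placement
metadata:
  name: pull-model-placement
  namespace: openshift-gitops
spec:
  # Select every managed cluster that belongs to the default clusterset.
  clusterSets:
    - default
---
apiVersion: apps.open-cluster-management.io/v1beta1
kind: GitOpsCluster
metadata:
  name: pull-model-gitops-cluster
  namespace: openshift-gitops
spec:
  argoServer:
    # Argo CD server on the hub that receives the managed cluster secrets.
    cluster: local-cluster
    argoNamespace: openshift-gitops
  # Clusters selected by this Placement get a cluster secret in argoNamespace.
  placementRef:
    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Placement
    name: pull-model-placement
    namespace: openshift-gitops
```

Keeping both resources in the same namespace as the Argo CD server keeps the Placement, its decisions, and the generated cluster secrets in one place.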

Deploying an application using the pull model

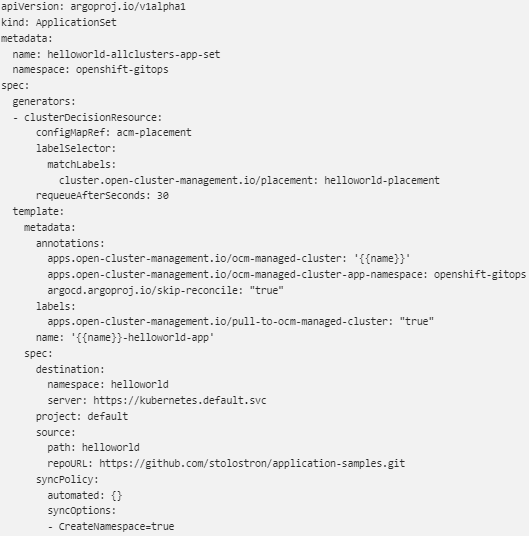

The Argo CD ApplicationSet CRD is used to deploy applications on the managed clusters with either the push or the pull model. In its generator field, it uses a Placement resource to obtain the list of managed clusters. The template field holds the application specification, in which values can be substituted as parameters. The Argo CD ApplicationSet controller on the hub cluster manages the creation of the application for each target cluster.

Since the application is deployed locally by the application controller on the managed cluster, the pull model requires the destination for the application to be the default local Kubernetes server (https://kubernetes.default.svc). Unless the annotations apps.open-cluster-management.io/ocm-managed-cluster and apps.open-cluster-management.io/pull-to-ocm-managed-cluster are added to the template section of the ApplicationSet, the push model is used by default to deploy the application.

When deploying applications with the pull model, it is crucial that the Argo CD application controllers on the hub cluster ignore these application resources. The intended solution is to add the argocd.argoproj.io/skip-reconcile annotation to the template section of the ApplicationSet. This way the ApplicationSet is still processed on the hub, which enables dynamic placement of applications on managed clusters through the clusterDecisionResource generator, while the generated Argo CD Applications are reconciled only on the managed clusters.

An example ApplicationSet YAML that employs the pull model is as follows:
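A minimal sketch of such an ApplicationSet is shown here, assuming the annotations described above. Several details are assumptions rather than part of the original text: the names `helloworld-app-set` and `pull-model-placement`, the `acm-placement` ConfigMap name, the `ocm-managed-cluster-app-namespace` annotation, and the placeholder repository URL. The sketch also sets `pull-to-ocm-managed-cluster` as a label, which is how the upstream samples we are aware of carry it; verify this against your RHACM version.

```yaml
# Sketch only: names, ConfigMap reference, and repository URL are assumptions.
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: helloworld-app-set
  namespace: openshift-gitops
spec:
  generators:
    - clusterDecisionResource:
        # ConfigMap that tells the generator how to read placement decisions.
        configMapRef: acm-placement
        labelSelector:
          matchLabels:
            cluster.open-cluster-management.io/placement: pull-model-placement
        requeueAfterSeconds: 30
  template:
    metadata:
      name: '{{name}}-helloworld-app'
      annotations:
        # Target managed cluster; filled in per cluster by the generator.
        apps.open-cluster-management.io/ocm-managed-cluster: '{{name}}'
        # Namespace for the Application on the managed cluster (assumed annotation).
        apps.open-cluster-management.io/ocm-managed-cluster-app-namespace: openshift-gitops
        # Keep the hub Argo CD application controllers away from this Application.
        argocd.argoproj.io/skip-reconcile: "true"
      labels:
        apps.open-cluster-management.io/pull-to-ocm-managed-cluster: "true"
    spec:
      project: default
      source:
        repoURL: https://example.com/your-org/helloworld.git  # placeholder
        targetRevision: main
        path: helloworld
      destination:
        # The pull model requires the local Kubernetes server as destination.
        server: https://kubernetes.default.svc
        namespace: helloworld
      syncPolicy:
        automated: {}
        syncOptions:
          - CreateNamespace=true
```

The `{{name}}` parameter is substituted with each cluster name returned by the placement decision, so one Application is generated per selected managed cluster.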

The ApplicationSet selects its target clusters through a Placement resource, such as the one created during setup for the default clusterset.

Architecture of controllers

On the hub cluster, two sets of controllers watch the ApplicationSet resources:

- The existing Argo CD application controllers

- The new propagation controller

Annotations in the application resource determine which controller reconciles to deploy the application. The Argo CD application controllers, which are used for the push model, ignore any application that carries the argocd.argoproj.io/skip-reconcile annotation. The propagation controller, which supports the pull model, reconciles only applications that carry the apps.open-cluster-management.io/ocm-managed-cluster annotation.

The propagation controller creates a ManifestWork to deliver the application to the managed cluster.

The value of the ocm-managed-cluster annotation identifies the managed cluster.

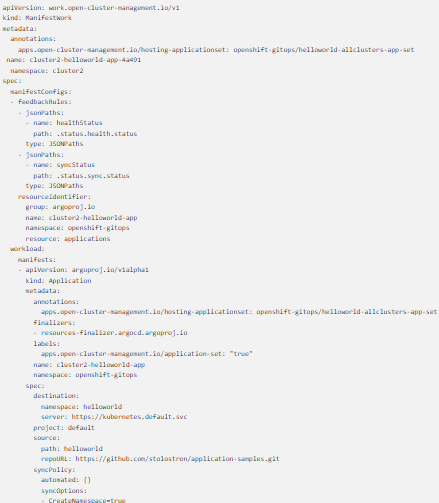

A sample ManifestWork YAML that the propagation controller generates to create the helloworld application on the managed cluster cluster2 looks like this:
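A minimal sketch of what such a ManifestWork could look like is given here, assuming the standard ManifestWork layout with a wrapped Application and JSONPaths feedback rules. The resource names, the Application spec, and the repository URL are hypothetical; the controller generates the real values.

```yaml
# Sketch only: names and the wrapped Application spec are hypothetical.
apiVersion: work.open-cluster-management.io/v1
kind: ManifestWork
metadata:
  name: cluster2-helloworld-app
  namespace: cluster2              # namespace of the managed cluster on the hub
spec:
  manifestConfigs:
    - resourceIdentifier:
        group: argoproj.io
        resource: applications
        namespace: openshift-gitops
        name: cluster2-helloworld-app
      # Report the Application health and sync status back to the hub.
      feedbackRules:
        - type: JSONPaths
          jsonPaths:
            - name: healthStatus
              path: .status.health.status
            - name: syncStatus
              path: .status.sync.status
  workload:
    manifests:
      # The Argo CD Application is carried as the payload.
      - apiVersion: argoproj.io/v1alpha1
        kind: Application
        metadata:
          name: cluster2-helloworld-app
          namespace: openshift-gitops
        spec:
          project: default
          source:
            repoURL: https://example.com/your-org/helloworld.git  # placeholder
            targetRevision: main
            path: helloworld
          destination:
            server: https://kubernetes.default.svc
            namespace: helloworld
```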

Because of the feedback rules defined in manifestConfigs, the health status and the sync status of the Argo CD application are synced into the ManifestWork's statusFeedback.

Flow for managed cluster application deployment

After the Argo CD application is created on the managed cluster through ManifestWork, the local Argo CD controllers reconcile to deploy it. The controllers use the following steps to deploy the application:

- Connect to the designated Git/Helm repository and pull resources from it.

- Deploy the resources locally on the managed cluster.

- Generate the Argo CD application status.

Multicluster Application Report: aggregating application statuses from the managed clusters

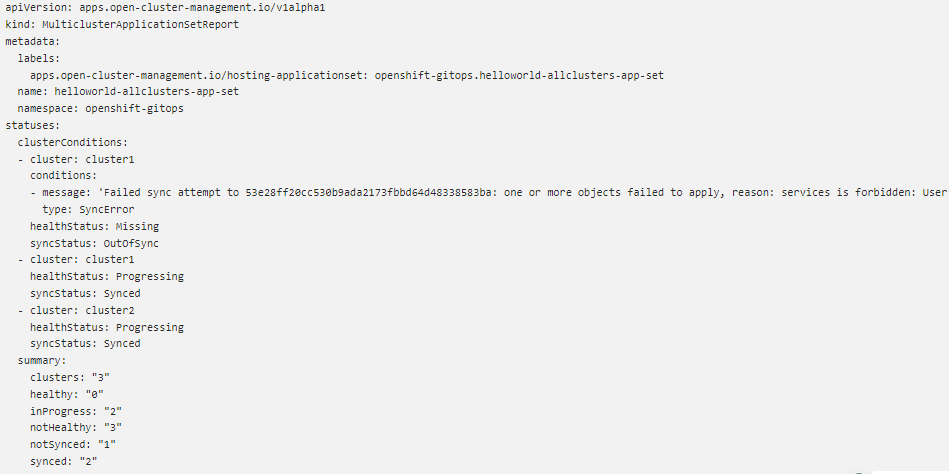

To provide an aggregate status of the ApplicationSet on the hub cluster, a new MulticlusterApplicationSetReport CRD is introduced. The report only covers applications that are deployed using the new pull model. It contains the list of resources and the overall status of the application from each managed cluster. A separate MulticlusterApplicationSetReport resource is created for each Argo CD ApplicationSet resource, in the same namespace as the ApplicationSet. The MulticlusterApplicationSetReport contains:

- The list of resources for the Argo CD application

- The overall sync and health status of the Argo CD application on each managed cluster

- An error message for each cluster whose overall status is out of sync or unhealthy

- A summary of the overall application status across all managed clusters

The resource sync controller and the aggregation controller are two new controllers that have been added to the hub cluster in order to facilitate the creation of the MulticlusterApplicationSetReport.

Every ten seconds, the resource sync controller queries each managed cluster’s RHACM search V2 component to obtain the resource list and any error messages specific to each Argo CD application.

The resource sync controller then produces an intermediate report for each ApplicationSet, which the aggregation controller uses to generate the final MulticlusterApplicationSetReport.

The aggregation controller also runs every ten seconds. It takes the report generated by the resource sync controller and adds the health and sync status of the application on each managed cluster. The status of each application is retrieved from the statusFeedback in the application's ManifestWork. Once the aggregation is finished, the final MulticlusterApplicationSetReport is saved in the same namespace as the Argo CD ApplicationSet, with the same name as the ApplicationSet.

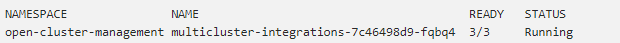

The propagation controller and the two new controllers each run in a separate container within the same multicluster-integrations pod.

A sample MulticlusterApplicationSetReport YAML for the helloworld ApplicationSet is provided below.
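A minimal sketch of the shape such a report could take is shown here, following the four parts listed earlier: the resource list, the per-cluster conditions, the error messages, and the summary. The field layout reflects our understanding of the CRD and should be checked against your RHACM version. The cluster names, statuses, and counts are illustrative values, not real output.

```yaml
# Sketch only: values are illustrative, not output from a real cluster.
apiVersion: apps.open-cluster-management.io/v1alpha1
kind: MulticlusterApplicationSetReport
metadata:
  name: helloworld-app-set         # same name as the ApplicationSet
  namespace: openshift-gitops      # same namespace as the ApplicationSet
statuses:
  clusterConditions:
    # An unhealthy or out-of-sync cluster carries an error message.
    - cluster: cluster1
      conditions:
        - type: SyncError
          message: "<error message reported for this cluster>"
      healthStatus: Missing
      syncStatus: OutOfSync
    - cluster: cluster2
      healthStatus: Healthy
      syncStatus: Synced
  # Resources that were actually deployed by the application.
  resources:
    - apiVersion: apps/v1
      kind: Deployment
      name: helloworld
      namespace: helloworld
  # Overall status across all managed clusters.
  summary:
    clusters: "2"
    healthy: "1"
    inProgress: "0"
    notHealthy: "1"
    notSynced: "1"
    synced: "1"
```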

All the resources listed in the MulticlusterApplicationSetReport are actually deployed on the managed cluster(s). If a resource fails to deploy, it is not included in the resource list. However, the error message explains why the resource could not be deployed.

RHACM policies versus the pull model

In addition to strengthening cluster security, the comprehensive RHACM policy framework can automate tasks on managed clusters thanks to its powerful templating feature. A full discussion of RHACM policies is outside the scope of this article. This section only touches on how policies can also be used to achieve the pull model and how the two approaches differ.

Users can automate the installation of the OpenShift GitOps operator every time a new managed cluster is created or imported in RHACM by using the policy templating feature. As part of this automation, users can also deliver an Argo CD Application resource to the managed cluster; in the pull model, the propagation controller does this. All of these steps make perfect sense to someone like a Solution Architect, but they are very intimidating for first-time RHACM users. Besides understanding how the GitOps operator works, new users would also need to understand how the policy generator and templating engine work. To use the pull model, new users only need to understand the concepts presented in this article.

Another drawback of using policies is that the application deployment results are scattered across all the target managed clusters. Users who are limited to the CLI would find it difficult to gather the results from every cluster. Users of the RHACM console can view the Argo CD applications on the applications page, but they must click on each application separately to see more specific information. With the pull model, this is easier for CLI users because the MulticlusterApplicationSetReport resource consolidates all the results in one place. When console support for the pull model is added (scheduled for RHACM 2.9), users will be able to click the ApplicationSet on the applications page and view detailed information for all deployed applications across the managed clusters on a single page.

Restrictions

- Resources are only deployed on the managed cluster(s).

- If a resource fails to deploy, it is not included in the MulticlusterApplicationSetReport.

- In the pull model, the local cluster is excluded as a target managed cluster.

- In certain use cases, the managed clusters may not be able to reach the Git server. In that situation, the push model is the only option.

Conclusion

This article covered how to set up an Argo CD Application Pull Model environment and how to deploy an application using the pull model. The pull model lets users deploy applications more effectively in environments where the centralized cluster has difficulty reaching the remote clusters, but the remote clusters are able to communicate with the centralized cluster.