Scaling OpenShift with the new OVN Kubernetes architecture

This post looks at how the new OVN Kubernetes architecture helps OpenShift scale.

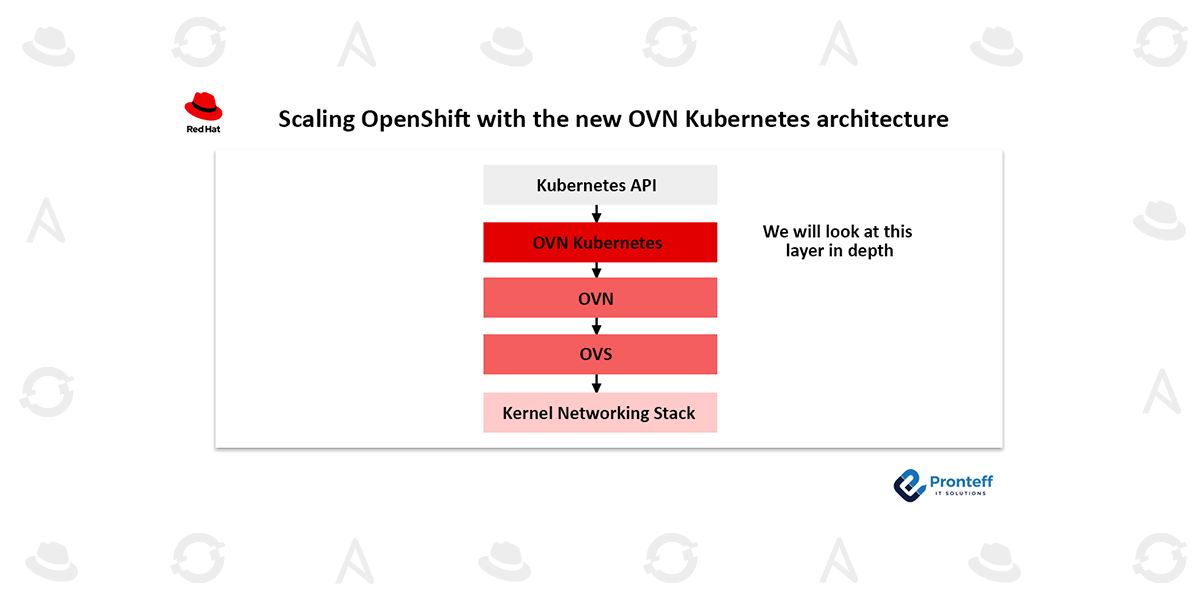

OVN Kubernetes (Open Virtual Networking - Kubernetes) is an open-source project that provides a robust networking solution for Kubernetes clusters, with OVN (Open Virtual Network) and Open vSwitch (Open Virtual Switch) at its core. The plugin is written to the CNI (Container Network Interface) specification and conforms to Kubernetes networking requirements. As of the 4.12 release, OVN Kubernetes is the default CNI plugin for Red Hat OpenShift Networking.

The OVN Kubernetes plugin watches the Kubernetes API. For each cluster event it receives, it creates and configures the corresponding OVN logical constructs in the OVN database. OVN, an abstraction built on top of Open vSwitch, translates these logical constructs into logical flows in its database and then programs the resulting OpenFlow flows on each node. This is what makes networking on a Kubernetes cluster work.

OVN Kubernetes offers several essential features and functionalities, such as:

- Creates pod networking, including IP Address Management (IPAM) allocation and the pod’s virtual ethernet (veth) interface.

- Programs overlay-based networking for Kubernetes clusters using Generic Network Virtualization Encapsulation (GENEVE) tunnels, enabling pod-to-pod communication.

- Implements Kubernetes Services and EndpointSlices through OVN load balancers.

- Implements Kubernetes NetworkPolicies and AdminNetworkPolicies through OVN Access Control Lists (ACLs).

- Supports Multiple External Gateways (MEG), allowing multiple dynamically or statically assigned egress next-hop gateways by using OVN ECMP routing features.

- Applies Quality of Service (QoS) Differentiated Services Code Point (DSCP) marking to traffic leaving the cluster through OVN QoS.

- Lets administrators send egress traffic from cluster workloads to destinations outside the cluster using a source IP address that they configure (EgressIP).

- Restricts egress traffic from cluster workloads through OVN Access Control Lists (Egress Firewall).

- Offers OVS Hardware Offload, which moves networking tasks from the CPU to the NIC to improve data-plane performance.

- Supports dual-stack IPv4/IPv6 clusters.

- Supports hybrid networking for mixed Windows and Linux clusters using VXLAN tunnels.

- Provides IP multicast using OVN IGMP snooping and relays.

The Legacy OVN Kubernetes Architecture

Before looking at the changes to the OVN Kubernetes architecture, it helps to understand the legacy architecture used in OpenShift 4.13 and earlier, along with some of its limitations.

Parts of the Control Plane

These components run only on the cluster’s control plane nodes. They are containers in the ovnkube-master pod within the openshift-ovn-kubernetes namespace:

- ovnkube-master (OVN Kubernetes Master): The central brain of the OVN Kubernetes plugin. It watches the Kubernetes API and receives cluster events for Nodes, Namespaces, Pods, Services, EndpointSlices, Network Policies, and other CRDs (Custom Resource Definitions). It translates each cluster event into OVN logical elements such as routers, switches, load balancers, policies, NATs, ACLs, port groups, and address sets, and writes them to the leader of the OVN Northbound database. It also creates the cluster’s logical network topology and handles pod IPAM allocations, which are annotated on the Pod objects.

- nbdb (OVN Northbound Database): A native OVN component that stores the logical entities created by the OVN Kubernetes master.

- northd (OVN North daemon): A native OVN daemon that continuously watches the Northbound database, translates logical entities into lower-level OVN logical flows, and inserts those flows into the OVN Southbound database.

- sbdb (OVN Southbound Database): A native OVN component that stores the logical flows created by OVN northd.

The nbdb and sbdb containers running across the control plane nodes are clustered using RAFT. Consistency across the three database replicas is maintained because only one replica is the leader at any given time and the other two are followers.
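The leader and follower arrangement depends on a majority of replicas agreeing. As a rough illustration of the standard RAFT quorum arithmetic (this is not OVN code, and the function names are hypothetical), the sketch below shows why a three-replica database cluster can lose one member but not two:

```python
def quorum_size(replicas: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """Replicas that can be lost while a majority can still be formed."""
    return replicas - quorum_size(replicas)


if __name__ == "__main__":
    # Three replicas, as in the legacy control plane described above.
    print(quorum_size(3), tolerated_failures(3))
```

With three replicas the quorum is two, so a single failed or partitioned replica is survivable, while losing two leaves the databases unable to agree on writes.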

Components for Each Node

These components run on every node in the cluster. They are containers in the ovnkube-node pod within the openshift-ovn-kubernetes namespace:

- ovnkube-node (OVN Kubernetes Node): A process running on every node that kubelet/CRI-O invokes as a CNI plugin. It handles CNI Add and Delete requests. It reads the IPAM annotation written by OVN Kubernetes Master and creates the corresponding virtual ethernet interface for the pod on the host networking stack. It also programs the iptables rules and OpenFlow flows needed to implement Kubernetes services on each node.

- ovn-controller (OVN Controller): A native OVN daemon that watches the logical flows in the OVN Southbound database, translates them into OpenFlow flows (physical flows), and programs them onto the Open vSwitch running on each node.

Open vSwitch runs as a system process on every node. It is a programmable virtual switch that implements the OpenFlow specification and enables networking through software-defined concepts.

Scale-Related Limitations

This architecture had several problems:

- Instability: The OVN Northbound and Southbound databases are central to the entire cluster and are tied together by the RAFT consensus model. Historically, this has led to many split-brain problems in which the databases diverge and lose consensus, particularly when they are large. These problems cannot be fixed with workarounds and often require rebuilding the OVN databases from scratch. The impact is not isolated: it stalls the networking control plane of the entire cluster. This has been especially painful during updates.

- Scale bottlenecks: The ovn-controller container connects to the Southbound database for logical flow information, as shown in the diagram above. On large clusters with N nodes, this means each Southbound database replica handles N/3 connections from ovn-controllers (HA mode). This has caused scalability problems in the past, because the centralized Southbound databases cannot keep up with the volume of data they must serve to the ovn-controllers in large clusters.

- Performance bottlenecks: The OVN Kubernetes brain is central to the entire cluster and stores and processes all Kubernetes pod, service, and endpoint objects across all nodes. In addition, each ovn-controller watches the global Southbound databases for changes pertaining to all nodes. The result is higher operational latency and high resource consumption on the control plane nodes.

- Non-restricted infrastructure traffic: Because ovn-controllers talk to the central Southbound databases, infrastructure network traffic flows from every worker node to the master nodes. In the HyperShift scenario, where the hosted cluster’s control plane stack resides on the management cluster, this traffic crosses cluster boundaries in a way that is hard to control.

The OVN Kubernetes Interconnect Architecture

To address these problems, OpenShift 4.14 introduced a new OVN Kubernetes architecture in which most of the control-plane complexity is pushed down to each cluster node.

Parts of the Control Plane

The control plane is very lightweight: it runs a single container, ovnkube-cluster-manager (OVN Kubernetes ClusterManager), in the ovnkube-control-plane pod within the openshift-ovn-kubernetes namespace. This is the central brain of the OVN Kubernetes plugin. It watches the Kubernetes API server and receives events related to Kubernetes nodes. Its main responsibility is to allocate non-overlapping pod subnets to each node in the cluster. It has no knowledge of the underlying OVN stack.
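To make the subnet allocation step concrete, here is a minimal sketch using Python's standard `ipaddress` module. It is not the cluster manager's actual code: the function name, the node names, and the cluster network `10.128.0.0/14` with a `/23` per-node prefix are all values assumed for illustration.

```python
from __future__ import annotations

import ipaddress


def allocate_node_subnets(cluster_cidr: str, host_prefix: int, nodes: list[str]) -> dict:
    """Hand each node the next unused, non-overlapping slice of the cluster network."""
    network = ipaddress.ip_network(cluster_cidr)
    # subnets() yields consecutive, non-overlapping blocks of the requested size.
    free_subnets = network.subnets(new_prefix=host_prefix)
    allocation = {}
    for node in nodes:
        try:
            allocation[node] = next(free_subnets)
        except StopIteration:
            raise ValueError("cluster network has no free node subnets left") from None
    return allocation


if __name__ == "__main__":
    # Hypothetical node names and network sizes, chosen only for the example.
    for node, subnet in allocate_node_subnets(
        "10.128.0.0/14", 23, ["node-a", "node-b", "node-c"]
    ).items():
        print(node, subnet)
```

In this sketch the three nodes receive `10.128.0.0/23`, `10.128.2.0/23`, and `10.128.4.0/23`. Because each node owns a distinct block, a per-node component can assign pod IPs locally without coordinating with other nodes.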

Per Node Components

The data plane is heavier. Each node runs its own instance of the OVN Kubernetes Controller, which is the local brain for that node, along with the OVN databases, the OVN North daemon, and the OVN Controller, all as containers in the ovnkube-node pod within the openshift-ovn-kubernetes namespace. The OVN Kubernetes Controller combines the legacy OVN Kubernetes Master and OVN Kubernetes Node containers. This local brain watches for Kubernetes events relevant to its node and creates the required entities in the local OVN Northbound database. The local OVN North daemon translates these constructs into logical flows and stores them in the local OVN Southbound database. The OVN Controller connects to the local Southbound database and programs the OpenFlow flows onto Open vSwitch.
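The idea of a local brain that only acts on events relevant to its own node can be sketched as a simple filter. This is a deliberately simplified illustration, not the controller's real logic: the `PodEvent` type, its fields, and the node names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodEvent:
    """Hypothetical, stripped-down view of a pod event."""
    name: str
    node: str  # node the pod is scheduled on


def events_for_node(events, local_node):
    """Keep only the pod events for pods scheduled on local_node."""
    return [event for event in events if event.node == local_node]


if __name__ == "__main__":
    cluster_events = [
        PodEvent("web-1", "node-a"),
        PodEvent("db-1", "node-b"),
        PodEvent("web-2", "node-a"),
    ]
    # The controller on node-a would only turn these into local OVN entities.
    print(events_for_node(cluster_events, "node-a"))
```

Each node's controller then writes entities for just its own share of the cluster into its local Northbound database, rather than one central component handling every pod.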

Enhancements

- Stability: The OVN Northbound and Southbound databases are local to each node. Because they run in standalone mode, RAFT is no longer needed, which avoids the “split-brain” problems entirely. If one of the databases fails, the impact is isolated to that node. This has made the OVN Kubernetes stack more stable and customer escalations easier to resolve.

- Scale: The ovn-controller container connects to the local Southbound database to obtain logical flow information, as shown in the diagram above. On large clusters with N nodes, this means each Southbound database handles only one connection, from its own local ovn-controller. With the scale bottlenecks of the centralized model removed, the architecture can scale horizontally with the number of nodes.

- Performance: The OVN Kubernetes brain is now local to each cluster node and stores and processes only the Kubernetes pod, service, and endpoint objects relevant to that node (note: some features, such as NetworkPolicies, still need to process pods running on other nodes). Because the OVN stack processes less data, operational latency improves. A lighter control plane stack is an additional benefit.

- Security: Infrastructure network traffic between the ovn-controller and the OVN Southbound database now stays within each node. This reduces chatter between nodes (OpenShift) and between clusters (HostedControlPlane), which improves traffic security.
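The scale difference between the two models can be checked with simple arithmetic. The sketch below assumes ovn-controller connections are spread evenly across three central Southbound replicas in the legacy model, which is an idealization; the function names are hypothetical.

```python
import math


def legacy_connections_per_sbdb(nodes: int, replicas: int = 3) -> int:
    """Approximate ovn-controller connections per central Southbound replica (N/3 in HA mode)."""
    return math.ceil(nodes / replicas)


def interconnect_connections_per_sbdb() -> int:
    """Each node-local Southbound database serves only its own ovn-controller."""
    return 1


if __name__ == "__main__":
    for n in (30, 300, 900):
        print(n, legacy_connections_per_sbdb(n), interconnect_connections_per_sbdb())
```

In the legacy model the per-database connection count grows linearly with cluster size, while in the interconnect model it stays constant no matter how many nodes are added.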

Readers may wonder whether they need to provision additional resources on their workers now that the complexity has moved from the control plane to the data plane. So far, our extensive scale testing has not shown a need for any such drastic measures. That said, there is no one-size-fits-all answer.

Negative aspects

Because each node has a local OVN Kubernetes brain rather than a centralized one, the load on the Kubernetes API server will be higher than with the legacy OVN Kubernetes architecture in OpenShift 4.13 and earlier, and similar to that of the legacy OpenShift SDN network plugin.

Because each node in the new architecture has its own OVN Kubernetes controller and OVN databases, it can be harder to verify, feature by feature, that the entities created in each of the many OVN databases are correct, particularly at large scale.