Performance and Scalability of OpenShift Virtualization

By unifying virtual machine (VM) deployment and management with containerized applications in a cloud-native fashion, Red Hat OpenShift Virtualization helps remove workload barriers. As part of the larger Performance and Scale team, we have been measuring and analyzing VMs running on OpenShift since the early days of the KubeVirt open source project. We have also helped drive product development through workload optimization, scale testing, and new feature evaluation. This article covers several of our focus areas and offers additional insight into running and scaling VM workloads on OpenShift.

Guidelines for tuning and scaling

To help customers get the most out of their VM deployments, our team contributes to tuning and scaling documentation. First, this knowledge base article provides an overall tuning guide. It covers tuning options at the host and VM levels to improve workload performance, as well as recommendations for optimizing the Virtualization control plane for high VM “burst” creation rates.

Second, we published an in-depth reference architecture that includes tuning details for an OpenShift cluster, a Red Hat Ceph Storage (RHCS) cluster, and the network. It also examines sample VM deployments and boot storms, I/O latency scaling, VM migration and parallelism, and cluster upgrades at scale.

Areas of focus for the team

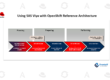

The following sections outline some of our main focus areas and describe the testing we conduct to evaluate and improve the performance of VMs running on OpenShift. Figure 1 depicts these focus areas.

Workload efficiency

To ensure broad coverage, we spend a lot of time on key workloads that exercise compute, networking, and storage components. This work involves gathering continuous baselines on different hardware models, updating results as new releases become available, and investigating tuning options in depth to achieve optimal performance.

Database performance is one key workload focus area. We typically use HammerDB as the workload driver and test several database types, including MariaDB, PostgreSQL, and MSSQL, to understand how each performs. This template provides an example HammerDB VM definition.

Another key workload focus area is SAP HANA, a high-throughput in-memory database, where our target is to perform within 10% of bare metal. We accomplish this by applying a few isolation-style tunings at the host and VM layers: using CPUManager, adjusting the process affinity controlled by systemd, backing the VM with hugepages, and using SR-IOV network attachments.
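As a rough illustration of the VM-side settings named above, the following Python sketch builds a KubeVirt VirtualMachine manifest fragment as a plain dictionary. It assumes the `kubevirt.io/v1` API fields `dedicatedCpuPlacement`, `hugepages.pageSize`, and an `sriov` interface bound to a Multus network. The function name, VM name, sizes, and network name are hypothetical, disks and volumes are omitted, and this is not the team's actual configuration. Host-side steps such as enabling CPUManager and adjusting systemd process affinity are outside its scope.

```python
import json


def isolated_vm_manifest(name, cores, memory, sriov_network):
    """Build a VirtualMachine manifest fragment (hypothetical helper).

    Only the isolation-related fields are filled in; disks, volumes,
    and guest OS details are intentionally left out of this sketch.
    """
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "runStrategy": "Halted",  # create the VM without starting it
            "template": {
                "spec": {
                    "domain": {
                        "cpu": {
                            "cores": cores,
                            # Pin vCPUs to dedicated host CPUs; this relies
                            # on CPUManager being enabled on the node.
                            "dedicatedCpuPlacement": True,
                        },
                        "resources": {"requests": {"memory": memory}},
                        # Back guest memory with hugepages (size assumed).
                        "memory": {"hugepages": {"pageSize": "1Gi"}},
                        "devices": {
                            "interfaces": [{"name": "sriov-net", "sriov": {}}],
                        },
                    },
                    "networks": [
                        {
                            "name": "sriov-net",
                            "multus": {"networkName": sriov_network},
                        },
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # All argument values here are placeholders.
    manifest = isolated_vm_manifest("hana-vm", 8, "64Gi", "default/sriov-net")
    print(json.dumps(manifest, indent=2))
```

Printing the dictionary as JSON produces a document that can be reviewed or converted to YAML before it is applied to a cluster.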

To further cover storage performance, we use the Vdbench workload to run a series of I/O application patterns that focus on IOPS (input/output operations per second) and latency. The application patterns vary the block size, I/O operation type, number and size of files and directories, and read/write ratio. This lets us cover a range of I/O behaviors and understand their different performance characteristics. We also run Fio, another popular storage microbenchmark, to measure different storage profiles. We test multiple persistent storage providers, but our main focus is OpenShift Data Foundation using Block mode RADOS Block Device (RBD) volumes in VMs.
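To make the idea of a pattern matrix concrete, here is a minimal Python sketch that enumerates combinations of block size and I/O operation type as Fio command lines. It uses Fio rather than Vdbench purely for illustration. The block sizes, operation types, 70% read mix, runtime, and target device are assumed values, not the patterns the team actually runs.

```python
from itertools import product

# Hypothetical parameter sets; real test matrices will differ.
BLOCK_SIZES = ["4k", "64k", "1m"]
IO_TYPES = ["read", "write", "randread", "randwrite", "randrw"]


def fio_commands(target, runtime_s=60, read_mix=70):
    """Return one fio argument list per (block size, I/O type) pair."""
    commands = []
    for bs, rw in product(BLOCK_SIZES, IO_TYPES):
        args = [
            "fio",
            f"--name={rw}-{bs}",
            f"--filename={target}",
            f"--rw={rw}",
            f"--bs={bs}",
            "--direct=1",            # bypass the page cache
            "--ioengine=libaio",
            "--time_based",
            f"--runtime={runtime_s}",
            "--output-format=json",
        ]
        if rw == "randrw":
            # The read/write ratio only applies to mixed workloads.
            args.append(f"--rwmixread={read_mix}")
        commands.append(args)
    return commands


if __name__ == "__main__":
    # /dev/vdb is a placeholder for a scratch block device inside the VM.
    for cmd in fio_commands("/dev/vdb"):
        print(" ".join(cmd))
```

Each argument list can be passed to `subprocess.run` inside the guest, and the JSON output collected for comparison across storage providers.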

To round out these more complex workloads, we also run various microbenchmarks to evaluate the performance of other components. For networking, we typically use the uperf workload to measure Stream and RequestResponse test configurations across different message sizes and thread counts. We focus on the default pod network as well as other container network interface (CNI) types, such as Linux Bridge and OVN-Kubernetes additional networks. For compute tests, we use a range of benchmarks depending on the focus area, including stress-ng, blackscholes, SPECjbb2005, and others.

Testing for regression

Using an automation framework called benchmark-runner, we continuously run workload configurations and compare the results to known baselines in order to catch and fix regressions in OpenShift Virtualization pre-release versions. Because virtualization performance is a key focus, we run this continuous testing framework on bare metal systems. To understand relative performance, we compare workloads with similar configurations across pods, VMs, and sandboxed containers. The automation also lets us install OpenShift and its operators (OpenShift Virtualization, OpenShift Data Foundation, Local Storage Operator, and OpenShift sandboxed containers) quickly and efficiently.

By characterizing the performance of pre-release versions several times a week, we can catch regressions before they reach customers. This also helps us track performance improvements as we move to newer releases with enhanced features.
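The baseline comparison described in this section can be sketched in a few lines of Python. This is a simplified illustration, not how benchmark-runner is implemented: the function name, metric names, and the 5% tolerance are all assumptions.

```python
def find_regressions(baseline, current, lower_is_better=(), tolerance=0.05):
    """Return metrics that got worse than the baseline by more than tolerance.

    baseline, current: dicts mapping metric name to a numeric result.
    lower_is_better:   metric names where a smaller value is better
                       (for example, latency).
    The returned dict maps each regressed metric to its relative change,
    expressed so that a negative value always means "worse".
    """
    regressions = {}
    for metric, base in baseline.items():
        if metric not in current or base == 0:
            continue  # nothing to compare against
        change = (current[metric] - base) / base
        if metric in lower_is_better:
            change = -change  # a latency increase counts as a decline
        if change < -tolerance:
            regressions[metric] = change
    return regressions


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    baseline = {"db_tpm": 1000.0, "p99_latency_ms": 10.0}
    current = {"db_tpm": 900.0, "p99_latency_ms": 10.2}
    print(find_regressions(baseline, current, lower_is_better={"p99_latency_ms"}))
```

With these made-up numbers, throughput dropped by 10% and is flagged, while the 2% latency increase stays inside the tolerance. A real pipeline would also need to account for run-to-run variance before declaring a regression.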

The workloads we currently run on a regular basis include database benchmarks, compute microbenchmarks, uperf, Vdbench, and Fio. We also run VM “boot storm” and pod startup latency tests, which exercise different parts of the cluster to measure how quickly a large number of pods or VMs can be started at once. We are constantly expanding our automated workload coverage.
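One simple way to summarize a boot storm or pod startup test is to compute per-object startup latency percentiles from creation and ready timestamps. The Python sketch below assumes those timestamps have already been collected (for example, from object status conditions) as seconds. The function name and the nearest-rank percentile method are choices made for this illustration.

```python
import math


def startup_latency_summary(created_at, ready_at):
    """Summarize startup latency for a batch of VMs or pods.

    created_at, ready_at: dicts mapping object name to a timestamp in
    seconds. Objects that never became ready are ignored.
    """
    names = [n for n in created_at if n in ready_at]
    if not names:
        raise ValueError("no objects with both timestamps")
    latencies = sorted(ready_at[n] - created_at[n] for n in names)

    def percentile(p):
        # Nearest-rank percentile on the sorted latencies.
        rank = max(1, math.ceil(p / 100 * len(latencies)))
        return latencies[rank - 1]

    return {
        "count": len(latencies),
        "p50": percentile(50),
        "p99": percentile(99),
        "max": latencies[-1],
        # Time from the first creation to the last object becoming ready.
        "wall_clock": max(ready_at[n] for n in names)
        - min(created_at[n] for n in names),
    }


if __name__ == "__main__":
    # Ten hypothetical VMs created together, becoming ready 1..10 s later.
    created = {f"vm-{i}": 0 for i in range(10)}
    ready = {f"vm-{i}": i + 1 for i in range(10)}
    print(startup_latency_summary(created, ready))
```

Tracking both the percentiles and the overall wall-clock time helps separate a few slow stragglers from a general slowdown in the creation path.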

Migration efficiency

One benefit of using a shared storage provider that supports the RWX access mode is that VM workloads can live migrate seamlessly during cluster upgrades. We continually work to increase the speed at which VMs can migrate without significantly disrupting the workload. This involves testing and recommending migration limits and policies that provide safe default values, as well as testing much higher limits to uncover bottlenecks in the migration components. We also quantify the benefits of setting up a dedicated migration network, and we characterize migration progress over the network by examining node-level networking metrics and per-VM migration metrics.
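As a hedged illustration of what migration limits and a dedicated migration network look like in configuration, the Python sketch below builds a merge-patch body for the `liveMigrationConfig` stanza of the HyperConverged custom resource. The field names reflect that stanza as we understand it. The function name, the numeric limits, and the network name are placeholders, not recommended values.

```python
import json


def live_migration_patch(per_cluster, per_node_outbound, network=None):
    """Build a merge-patch body for spec.liveMigrationConfig (sketch).

    per_cluster:       cap on migrations running in parallel cluster-wide.
    per_node_outbound: cap on parallel outbound migrations per node.
    network:           optional name of a network attachment definition
                       to use as a dedicated migration network.
    """
    config = {
        "parallelMigrationsPerCluster": per_cluster,
        "parallelOutboundMigrationsPerNode": per_node_outbound,
    }
    if network is not None:
        config["network"] = network
    return {"spec": {"liveMigrationConfig": config}}


if __name__ == "__main__":
    # Placeholder values chosen only to show the shape of the patch.
    patch = live_migration_patch(10, 4, network="migration-net")
    print(json.dumps(patch, indent=2))
```

Raising these limits trades faster drains for more concurrent network and CPU load, which is why the effect on running workloads needs to be measured at each setting.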

Scale testing

We regularly test high-scale environments to uncover bottlenecks and evaluate tuning options. Our scale testing covers areas such as OpenShift control plane scale, Virtualization control plane scale, workload I/O latency scaling, migration parallelism, DataVolume cloning, and VM “burst” creation tuning.

This testing has uncovered a number of scale-related bugs, which ultimately led to improvements and allowed us to raise the bar for the next round of scale tests. We document any scaling best practices we find along the way in our general Tuning and Scaling Guide.

Performance of hosted clusters

We are also studying the performance of hosted control planes and hosted clusters that use OpenShift Virtualization, specifically on-premises bare metal hosted control planes and hosted clusters that use the KubeVirt cluster provider.

Our initial focus areas are scale testing multiple instances of etcd (see the storage recommendation in the Important section), managing hosted control plane clusters on OpenShift Virtualization, and hosted workload performance when testing hosted control planes with heavy API workloads. For one of the main results of this recent work, see our hosted cluster sizing guidelines.