Red Hat OpenShift on Google Cloud

In this blog, we look at running Red Hat OpenShift on Google Cloud.

Red Hat OpenShift is a platform for building, deploying, and running cloud-native business applications anywhere from the hybrid cloud to the edge. It offers self-service provisioning for developers, full-stack automated operations, security across the whole application development process, and a consistent user experience across all environments.

Google Cloud, one of the leading cloud providers, lets you adopt cloud computing quickly and simply. Through its Vertex AI offering, it has placed a strong focus on AI workloads, making it easier to create, train, manage, and deploy AI models in the cloud.

You can run Red Hat OpenShift on Google Cloud in several ways. One is to self-manage it, either by bringing your own subscription or by purchasing one directly from Google Cloud Marketplace, in which case the purchase can count toward your Google Cloud committed spend.

Customers can also use Red Hat OpenShift Dedicated, a managed cloud service running on Google Cloud, available through console.redhat.com and soon from Google Cloud Marketplace. Because a team of Red Hat site reliability engineers (SREs) maintains the application platform, customers can concentrate on the application lifecycle.

Whichever option you choose, and whether the cluster is hosted on Google Cloud or in your own data center, every Red Hat OpenShift offering provides a largely consistent user experience and set of features.

See the following demonstration for more information on how to set up a Red Hat OpenShift cluster on Google Cloud:

The advantages of a hybrid approach

Suppose you have access to petabytes of useful data that you could use to significantly improve customer satisfaction, for instance through a shopper recommendation system.

The difficulty? For one reason or another, your data cannot leave your premises.

Or what about building new image recognition software for the medical industry using patient data?

You can probably already see where this is going: to keep sensitive data from leaking off your premises, models often have to be trained where the data is. This is especially true when masking or anonymizing the data is difficult.

This effect is known as data gravity: work is carried out close to the data because the data itself cannot be moved, whether for compliance, cost, or other reasons.

To reduce cloud egress costs, make the most of their data's value, and de-risk compliance management, many businesses are choosing to stay on premises or move back there, or to onshore or nearshore their workloads.

On its own, however, on-premises infrastructure is often not the best answer. Cloud computing and storage bring many benefits, such as making it easy to expose applications and giving access to practically unlimited resources.

This is where a hybrid cloud approach helps. By using tools and solutions that behave consistently regardless of where they run, you can distribute your MLOps (machine learning operations) workflow and carry out each step where it is most appropriate.

For example, you could first anonymize raw data on premises to comply with data regulations, and then burst the training run to the cloud to accelerate it with additional resources. Alternatively, you could keep data processing and training on premises and serve the model in the cloud, which simplifies integration with cloud applications.
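One common way to handle the "anonymize first" step is keyed pseudonymization: direct identifiers are replaced with an HMAC of their value, computed with a secret key that never leaves the premises. The sketch below is a minimal illustration under stated assumptions: records are plain dicts, and the field names `patient_id` and `name` are hypothetical. Note that pseudonymization alone is weaker than full anonymization; quasi-identifiers such as age or postcode may still need to be generalized before the data leaves your site.

```python
import hashlib
import hmac

# Hypothetical field names; adapt these to your own schema.
DIRECT_IDENTIFIERS = {"patient_id"}  # replaced by a keyed digest
DROP_FIELDS = {"name"}               # removed entirely


def pseudonymize(record: dict, key: bytes) -> dict:
    """Return a copy of `record` that is safer to send to the cloud.

    Direct identifiers are replaced by an HMAC-SHA256 hex digest, so the same
    patient always maps to the same token (joins across tables still work),
    but the token cannot be linked back to the original ID without the key,
    which stays on premises. Fields in DROP_FIELDS are removed.
    """
    out = {}
    for field, value in record.items():
        if field in DROP_FIELDS:
            continue
        if field in DIRECT_IDENTIFIERS:
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            out[field] = digest.hexdigest()
        else:
            out[field] = value
    return out


if __name__ == "__main__":
    secret = b"keep-this-key-on-premises"  # placeholder; load from a secret store
    rows = [
        {"patient_id": 1001, "name": "A. Patient", "age": 54, "label": 1},
        {"patient_id": 1001, "name": "A. Patient", "age": 55, "label": 0},
    ]
    cleaned = [pseudonymize(r, secret) for r in rows]
    # Same patient, same token: records can still be grouped in the cloud.
    print(cleaned[0]["patient_id"] == cleaned[1]["patient_id"])
```

A keyed HMAC is used here rather than a plain hash because identifiers such as patient numbers come from a small space; without a secret key, anyone could hash every possible ID and reverse the mapping.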

Integration of Red Hat OpenShift and Google Cloud in a Hybrid Architecture

Combining Google Cloud and Red Hat OpenShift makes a hybrid approach possible. This strategy takes advantage of Google Cloud's cloud computing and AI capabilities, together with Red Hat OpenShift's ability to behave consistently across both environments.

Red Hat OpenShift AI, a modular MLOps platform that provides AI tooling as well as flexible integration options with a variety of independent software vendors (ISVs) and open source components, is a crucial part of this design.

On the Google Cloud side, the Vertex AI Model Registry provides a central place to manage the lifecycle of ML models. It gives an overview of your models so that you can organize, track, and train new versions. From the registry, you can deploy a new model version directly to an endpoint, or use aliases to deploy models to an endpoint.
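The relationship between versions, aliases, and endpoints can be illustrated with a small in-memory model. This is a hypothetical toy, not the Vertex AI API: the `ModelRegistry` class, its methods, and the `gs://example-bucket/...` URIs are invented for illustration, and Vertex AI's exact semantics may differ. In this toy, every upload creates a new version, an alias such as `production` points at one version, and deploying by alias resolves the alias to whichever version it points to at deploy time.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class ModelRegistry:
    """Toy, in-memory stand-in for a model registry (NOT the Vertex AI API)."""

    versions: Dict[int, str] = field(default_factory=dict)   # version -> artifact URI
    aliases: Dict[str, int] = field(default_factory=dict)    # alias -> version
    endpoints: Dict[str, int] = field(default_factory=dict)  # endpoint -> deployed version

    def upload(self, artifact_uri: str, aliases: Tuple[str, ...] = ()) -> int:
        """Register a new version and optionally point aliases at it."""
        version = len(self.versions) + 1
        self.versions[version] = artifact_uri
        for alias in aliases:
            self.aliases[alias] = version
        return version

    def set_alias(self, alias: str, version: int) -> None:
        """Move an alias (for example, promote a new version to production)."""
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        self.aliases[alias] = version

    def deploy(self, endpoint: str, version: Optional[int] = None,
               alias: Optional[str] = None) -> int:
        """Deploy an explicit version, or the version an alias currently points to."""
        if (version is None) == (alias is None):
            raise ValueError("pass exactly one of version or alias")
        chosen = self.aliases[alias] if alias is not None else version
        if chosen not in self.versions:
            raise KeyError(f"unknown version {chosen}")
        self.endpoints[endpoint] = chosen
        return chosen


if __name__ == "__main__":
    registry = ModelRegistry()
    v1 = registry.upload("gs://example-bucket/model/v1", aliases=("production",))
    v2 = registry.upload("gs://example-bucket/model/v2", aliases=("staging",))
    registry.deploy("recommendations", alias="production")  # deploys v1
    registry.set_alias("production", v2)                     # promote v2
    registry.deploy("recommendations", alias="production")  # now deploys v2
    print(registry.endpoints)
```

Deploying by alias lets a release process promote a model by moving a name rather than editing version numbers in every deployment script.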

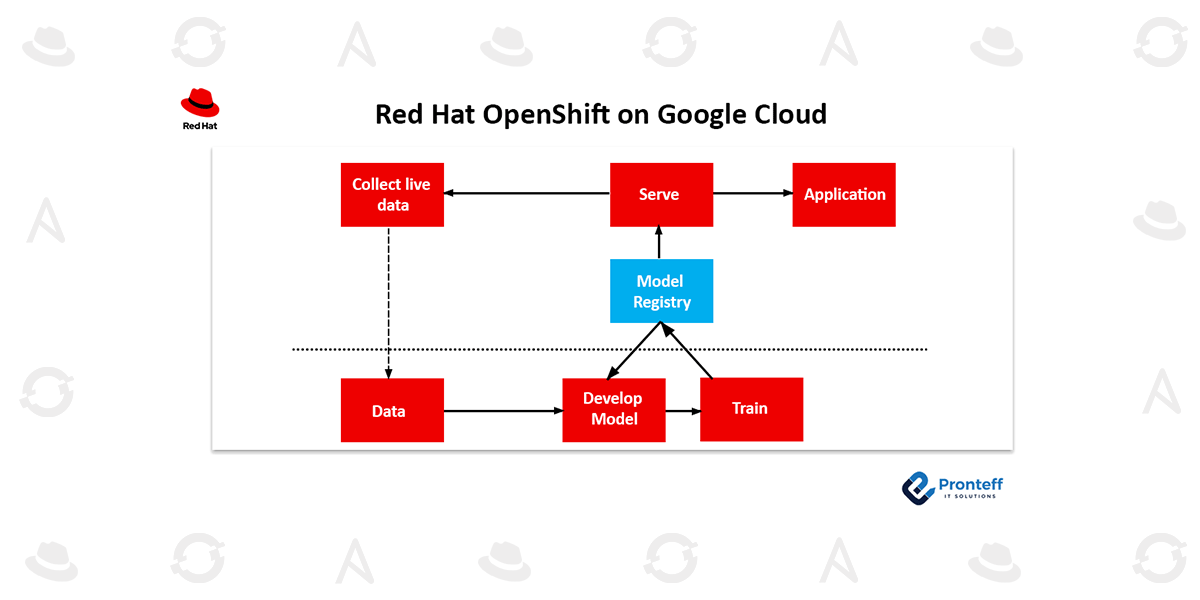

In this sample workflow, we collect and process the data on our on-premises servers. We then explore the data and develop a model architecture, and finally train the model locally.

We could skip the “Develop model” stage if all that is needed is to retrain an existing model.

Once the model has been trained, we upload it to the Vertex AI Model Registry on Google Cloud for testing and serving.

While the model is serving, we collect analytics to make sure it performs well in production. We also gather new data and send it back to our on-premises data lake.
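The sample workflow can be summarized as an ordered list of stages, each tagged with the environment it runs in. The sketch below is a hypothetical plan builder: the stage names paraphrase the steps described above, and `plan_run` is an invented helper, not part of OpenShift AI or Vertex AI. It shows how the "Develop model" stage drops out when all we need is to retrain an existing model.

```python
from typing import List, Tuple

ON_PREM = "on-premises OpenShift cluster"
CLOUD = "Google Cloud"

# Stages of the sample workflow, in order, with the environment each runs in.
STAGES: List[Tuple[str, str]] = [
    ("Collect and process data", ON_PREM),
    ("Develop model", ON_PREM),
    ("Train model", ON_PREM),
    ("Upload to Vertex AI Model Registry", CLOUD),
    ("Serve model and collect analytics", CLOUD),
    ("Return new data to on-premises data lake", ON_PREM),
]


def plan_run(retrain_only: bool = False) -> List[Tuple[str, str]]:
    """Return the stages to execute; retraining an existing model skips 'Develop model'."""
    return [
        (stage, where)
        for stage, where in STAGES
        if not (retrain_only and stage == "Develop model")
    ]


if __name__ == "__main__":
    for stage, where in plan_run(retrain_only=True):
        print(f"{stage:<45} -> {where}")
```

In practice, each stage would be a step in a pipeline or workflow engine rather than a tuple in a list; the point here is only that the stage-to-environment mapping stays explicit and that both environments expose the same OpenShift platform.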

Because Red Hat OpenShift is the underlying application platform for both the on-premises and the cloud clusters, we can move between them easily: the environment and tools are already familiar to us in both places.

What's next?

The small hybrid architecture example above only scratches the surface. A hybrid MLOps platform can also deploy models to many edge locations or across several cloud environments while still maintaining centralized control of the deployed models.

The MLOps workflow can be improved further by adding automated pipelines that retrain the models at predetermined intervals or in response to data drift. The workflow can also be integrated with a wide range of other components that support the overall process of developing and deploying models.
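A retraining trigger of this kind can be sketched as a check that fires either when a fixed interval has elapsed or when a drift metric crosses a threshold. The sketch below assumes the Population Stability Index (PSI) over pre-binned feature distributions as the drift metric; the choice of PSI, the 0.2 threshold (a commonly quoted rule of thumb), the 7-day interval, and the function names are all assumptions for illustration, not features of a specific product. PSI is computed as the sum over bins of (actual share - expected share) * ln(actual share / expected share).

```python
import math
from datetime import datetime, timedelta
from typing import Sequence

PSI_THRESHOLD = 0.2                   # assumed rule-of-thumb drift threshold
RETRAIN_INTERVAL = timedelta(days=7)  # assumed retraining schedule


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (counts or shares)."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    e_total, a_total = sum(expected), sum(actual)
    score = 0.0
    for e, a in zip(expected, actual):
        e_p = max(e / e_total, eps)  # clamp empty bins to avoid log(0) / division by zero
        a_p = max(a / a_total, eps)
        score += (a_p - e_p) * math.log(a_p / e_p)
    return score


def should_retrain(baseline: Sequence[float], current: Sequence[float],
                   last_trained: datetime, now: datetime) -> bool:
    """Retrain on schedule, or earlier if live data has drifted from the training data."""
    if now - last_trained >= RETRAIN_INTERVAL:
        return True
    return psi(baseline, current) > PSI_THRESHOLD


if __name__ == "__main__":
    baseline = [100, 300, 400, 200]  # illustrative bin counts at training time
    stable = [110, 290, 390, 210]    # small shift: below threshold
    shifted = [300, 300, 200, 200]   # large shift: above threshold
    trained = datetime(2024, 1, 1)
    print(should_retrain(baseline, stable, trained, trained + timedelta(days=2)))
    print(should_retrain(baseline, shifted, trained, trained + timedelta(days=2)))
```

Combining both conditions keeps models fresh on a predictable cadence while still reacting quickly when the live data in the cloud starts to look different from the on-premises training data.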

Whichever path you choose, a hybrid cloud MLOps architecture provides a solid foundation for bringing AI to your critical business data and production applications.