Staying Up-to-date with EUS-to-EUS Updates

Updates from EUS-to-EUS are now available.

OpenShift now allows customers to update between two Extended Update Support versions with only a single restart of non-Control-Plane nodes, starting with the update between 4.8 and 4.10. While following Kubernetes Version Skew Policies, which require serialized updates of all components other than the Kubelet, this decreases total update duration and workload restarts.

The Advantages of EUS-to-EUS Updates

The Kubernetes and OpenShift communities move quickly, with new minor versions released every three months in the past and only later moving to a four-month release cycle. Some customers have fallen behind due to the rapid pace of innovation and the necessity that all Kubernetes minor version changes be serialized. Customers that fall behind will have to put in the time and effort to catch up to the most recent edition.

We’re decreasing in half the number of maintenance events necessary to maintain a supported long-lived cluster by allowing administrators to upgrade their clusters between EUS versions with decreased workload disruption and overall update length.

Note that EUS-to-EUS upgrades are not available in Single-Node OpenShift (SNO) or Compact Cluster topologies because all nodes are Control Plane nodes.

A EUS-to-EUS Update is carried out.

A EUS-to-EUS update is relatively simple to carry out. Prior to updating from 4.8 to 4.9, you must first pause non-Control-Plane MachineConfigPools (MCPs), then update the cluster from 4.8 to 4.9, then update from 4.9 to 4.10, and finally unpause any MCPs you paused at the start of this process.

The table below shows which parts of the cluster are updated at each of the major steps during the EUS-to-EUS update process from 4.8 to 4.10. It’s critical to realize that the cluster will run OCP 4.9 as a stage in the process.

The Result:

When worker nodes are updated from RHCOS 4.8 to RHCOS 4.10, workloads are only resumed once.

In a 20 node cluster (in our tests), the entire duration of a EUS-to-EUS update can be decreased by up to 70 minutes when compared to a full update from 4.8 to 4.9 to 4.10, where it takes only 5 minutes to drain and update each worker. Due to workload or infrastructure factors such as bare metal hosts, which can take 10-15 minutes to reboot, the time to drain and reboot nodes in many client scenarios is significantly longer. Reducing the number of reboots in clusters with multiple nodes will save time and allow customers to stay within their maintenance periods.

Guard Rails Are Here To Stay

We wanted to make sure that the product protected you from upgrading too quickly and perhaps breaking compatibility matrices when we introduced EUS-to-EUS changes.

In order to ensure that nodes do not breach version skew regulations, we implemented adjustments. When your workers must catch up before commencing the next minor version upgrade, for example, you’ll get a warning.

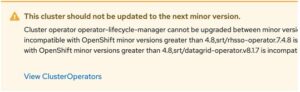

We added MaxOpenShiftVersion to Operator Lifecycle Manager (OLM) operators to restrict customers from updating their OCP cluster before upgrading the deployed operator version to any distribution that the operator supports. OLM Operators that rely on APIs that were removed in Kubernetes 1.22 and OpenShift 4.9 are frequently identified using this new feature. When the operator is incompatible with the OpenShift version, for example, a warning appears in the admin console:

Updates to OpenShift are now much faster.

We implemented some substantial general update enhancements, notably for multi-node OpenShift clusters, in addition to EUS-to-EUS updates. A 250 node cluster’s non-worker update time was lowered from 7.5 hours to 1.5 hours. Because a EUS-to-EUS update still includes two non-worker node upgrades, the impact of these modifications is quadrupled, saving an additional 12 hours for a 250-node cluster update.

Scheduling that is aware of updates

The Machine Config Operator and Scheduler interact in OpenShift 4.10 to prioritize rescheduling pods toward nodes that have already been updated. If Pod-1 was originally scheduled to Node-A before this change in 4.10, it may have been rescheduled to Node-B, then Node-C, then Node-D, and finally back to Node-A in the worst-case situation. As seen in the diagram, this translates to a total of four pod restarts:

This is far less likely now, thanks to the modifications made in 4.10. In the worst-case scenario, Pod-1 is now rescheduled from Node-A to Node-B, then back to Node-A, assuming sufficient capacity. Pod-2 is rescheduled from Node-D to Node-C in the best-case scenario.

All existing scheduling requirements, such as zone affinity or anti-affinity, and volume attachment limits, are still in effect. Based on your workload design, those limits may limit the effectiveness of these enhancements.

Try It Out For Yourself

Until Red Hat has promoted Updates from 4.9 to 4.10 into the Stable and EUS channels, the full EUS-to-EUS Update experience will not be available in the EUS channels. However, you can test this Update pattern today by following the same procedure; the only difference is that instead of switching from eus-4.8 to eus-4.10 channel, you’ll need to switch from stable-4.8 to stable-4.9 channel before upgrading to 4.9, and then to the fast-4.10 channel before upgrading to 4.10.