Applying resources in a specific order based on policy dependencies

Overview

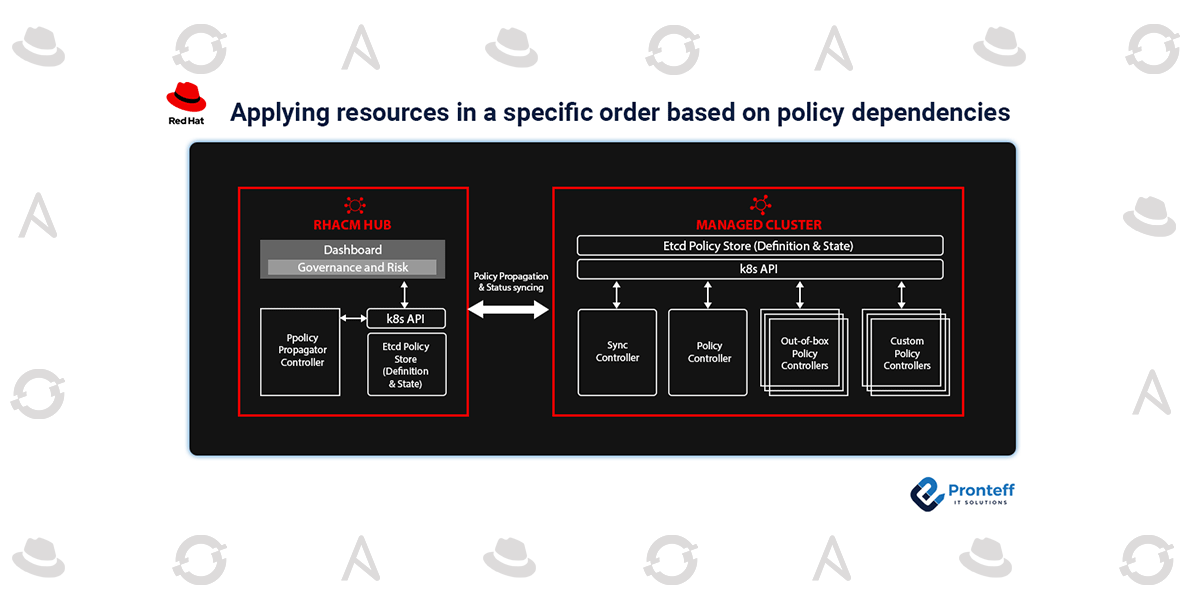

Policies in Red Hat Advanced Cluster Management for Kubernetes (RHACM) specify the desired state of the managed clusters in a fleet. Achieving a single goal, such as installing and configuring an operator, often requires several policies. The policy framework already lets you group many objects into a single policy and many policies into a policy set, but until now it had no way to apply policies in a particular order.

RHACM 2.7 adds two fields to the policy framework, spec.dependencies and spec.policy-templates[*].extraDependencies. They let policy authors specify when a policy should be applied, based on the compliance of other policies on the managed cluster. For instance, with extraDependencies an author can make sure that a custom resource definition and the operator that manages it are installed before trying to create an instance of that custom resource. This can reduce both the load on the Kubernetes API server and the noise from violations in a cluster.

Keep reading for a tutorial on using spec.dependencies and spec.policy-templates[*].extraDependencies to coordinate policies in two use cases. In the first example, policies install the Strimzi operator and then create a Kafka cluster, with policy dependencies guaranteeing that the steps happen in the proper sequence. The second example shows how to remediate violations automatically by activating a policy when another policy is noncompliant.

Policy dependency overview

Before using the new fields, it helps to understand how they are structured. The dependencies field sets requirements for everything in a policy, while the extraDependencies field adds requirements for one particular policy template. Both fields share the same structure: a list of objects with the following fields:

- apiVersion
- kind
- name
- namespace
- compliance

Each field is described in more detail in the documentation, but the namespace and compliance fields deserve extra attention. Dependencies are verified on each managed cluster, and configuration policies usually live in the cluster namespace, which has a different name on each cluster, so the correct value for the namespace field can vary from cluster to cluster. To handle this, leave the namespace field of the dependency empty; the framework then uses the cluster namespace dynamically.

The compliance field is the status that the target object must have. The dependency is satisfied when this value matches the status.compliant field of the target object. If the target object does not exist or its status does not match, the dependency is not satisfied and the policy (or template) that declares it is shown as Pending.
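To show where each field sits, here is a structural sketch that is not taken from the examples later in this post. All names (example-policy, example-prerequisite, example-check, example-template, example-config) are hypothetical placeholders, and placement resources are omitted:

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: example-policy              # hypothetical
  namespace: default
spec:
  # Requirements that apply to every template in this policy
  dependencies:
    - apiVersion: policy.open-cluster-management.io/v1
      kind: Policy
      name: example-prerequisite    # hypothetical policy on the hub
      namespace: default
      compliance: Compliant         # satisfied when the target's status.compliant is Compliant
  policy-templates:
    # Additional requirements that apply to this one template only
    - extraDependencies:
        - apiVersion: policy.open-cluster-management.io/v1
          kind: ConfigurationPolicy
          name: example-check       # hypothetical ConfigurationPolicy
          namespace: ""             # empty: resolved to the cluster namespace on each managed cluster
          compliance: Compliant
      objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: example-template    # hypothetical
        spec:
          remediationAction: inform
          severity: low
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: ConfigMap
                metadata:
                  name: example-config   # hypothetical object to check
                  namespace: default
```

In this sketch, if example-prerequisite is not Compliant on a cluster, every template in the policy waits in a Pending state; if only example-check is unsatisfied, only the template that lists it waits.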

Example: installing Kafka with Strimzi

Strimzi provides an operator-based way to deploy and manage Apache Kafka on a Kubernetes cluster. Using policies to manage an object that an operator controls is a common pattern with built-in dependencies: the operator must be installed before the object it manages can be created. In this example, the policies are written to enforce that order.

The following policy installs the operator in the myapp namespace:

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: myapp-strimzi-operator
  namespace: default
spec:
  disabled: false
  policy-templates:
    # Step 1: create the OperatorGroup
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-strimzi-operatorgroup
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1
                kind: OperatorGroup
                metadata:
                  name: myapp-strimzi
                  namespace: myapp
                spec:
                  targetNamespaces:
                    - myapp
                  # OLM defines upgradeStrategy as an object with a name field
                  upgradeStrategy:
                    name: Default
    # Step 2: create the Subscription once the OperatorGroup template is Compliant
    - extraDependencies:
        - apiVersion: policy.open-cluster-management.io/v1
          kind: ConfigurationPolicy
          name: myapp-strimzi-operatorgroup
          namespace: ""
          compliance: Compliant
      objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-strimzi-subscription
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1alpha1
                kind: Subscription
                metadata:
                  name: strimzi-kafka-operator
                  namespace: myapp
                spec:
                  channel: stable
                  installPlanApproval: Automatic
                  name: strimzi-kafka-operator
                  source: community-operators
                  sourceNamespace: openshift-marketplace
                  startingCSV: strimzi-cluster-operator.v0.32.0
    # Step 3: check the ClusterServiceVersion once the Subscription template is Compliant
    - extraDependencies:
        - apiVersion: policy.open-cluster-management.io/v1
          kind: ConfigurationPolicy
          name: myapp-strimzi-subscription
          namespace: ""
          compliance: Compliant
      objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-strimzi-csv-check
        spec:
          remediationAction: inform
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1alpha1
                kind: ClusterServiceVersion
                metadata:
                  namespace: myapp
                spec:
                  displayName: Strimzi
                status:
                  phase: Succeeded
                  reason: InstallSucceeded
```

This policy assumes that the myapp namespace already exists and, because Red Hat OpenShift Container Platform is used, that the Strimzi operator is available from the community-operators catalog source in the openshift-marketplace namespace. These prerequisites could also be managed by separate policies, with dependencies guaranteeing that they are applied first.
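As a sketch of that idea, a separate policy could create the namespace, and the operator policy could declare a dependency on it. The policy name myapp-namespace is hypothetical, only the added part of the operator policy is shown, and placement resources are omitted as in the rest of this post:

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: myapp-namespace             # hypothetical prerequisite policy
  namespace: default
spec:
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-namespace
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: Namespace
                metadata:
                  name: myapp
---
# Addition to the spec of the myapp-strimzi-operator policy (other fields unchanged)
spec:
  dependencies:
    - apiVersion: policy.open-cluster-management.io/v1
      kind: Policy
      name: myapp-namespace
      namespace: default
      compliance: Compliant
```

With this dependency in place, the operator templates would stay Pending until the namespace policy reports Compliant, instead of the OperatorGroup template failing against a missing namespace.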

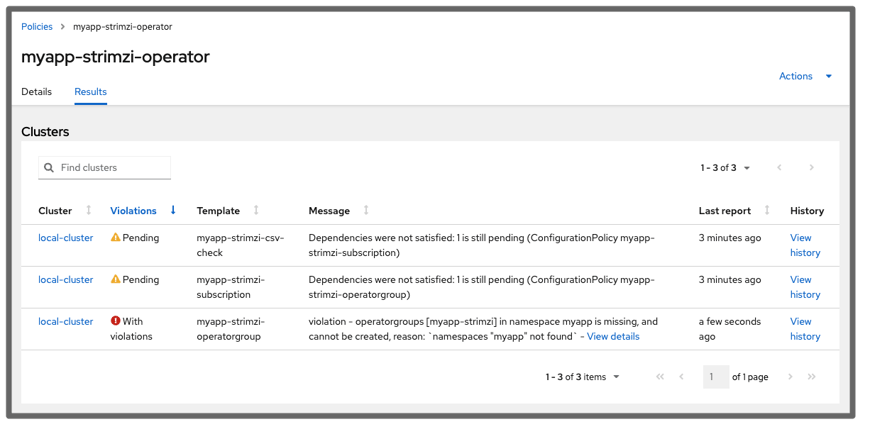

This operator policy uses the extraDependencies field to apply its templates in sequence: the Subscription is not created until the OperatorGroup is present, and the ClusterServiceVersion is not checked until the Subscription has been created. Because the configuration policies are in the cluster namespace, each dependency uses an empty namespace field.

If the myapp namespace has not been created yet, the OperatorGroup cannot be created, so the templates that depend on it are shown as Pending in the RHACM Governance console. The status messages in the table explain why each template is pending, as in the following example:

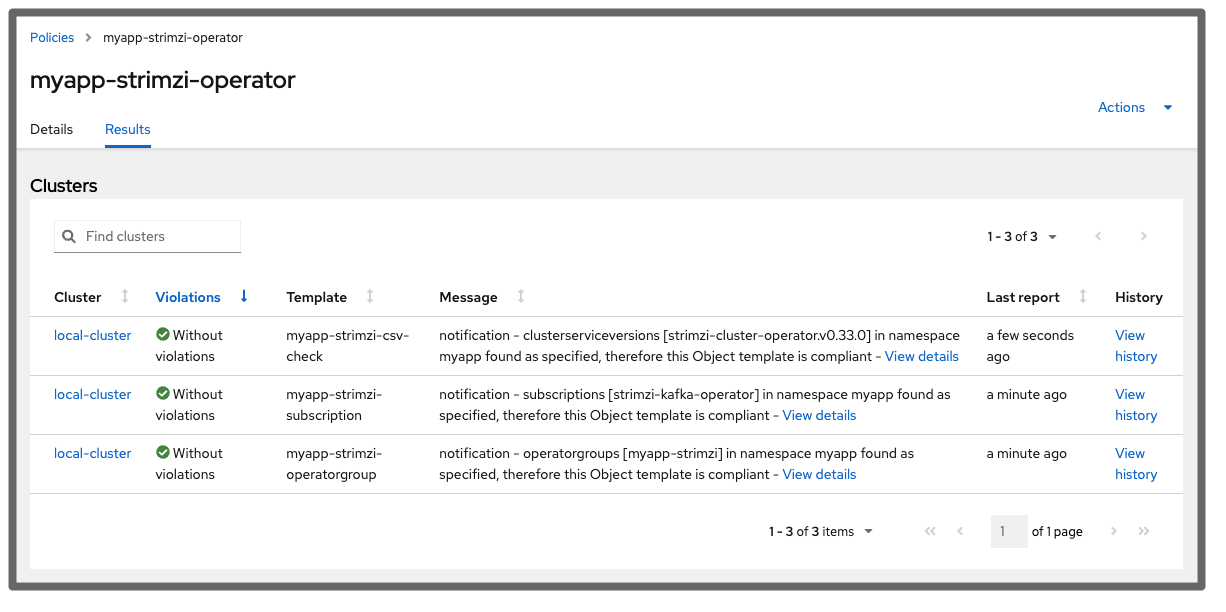

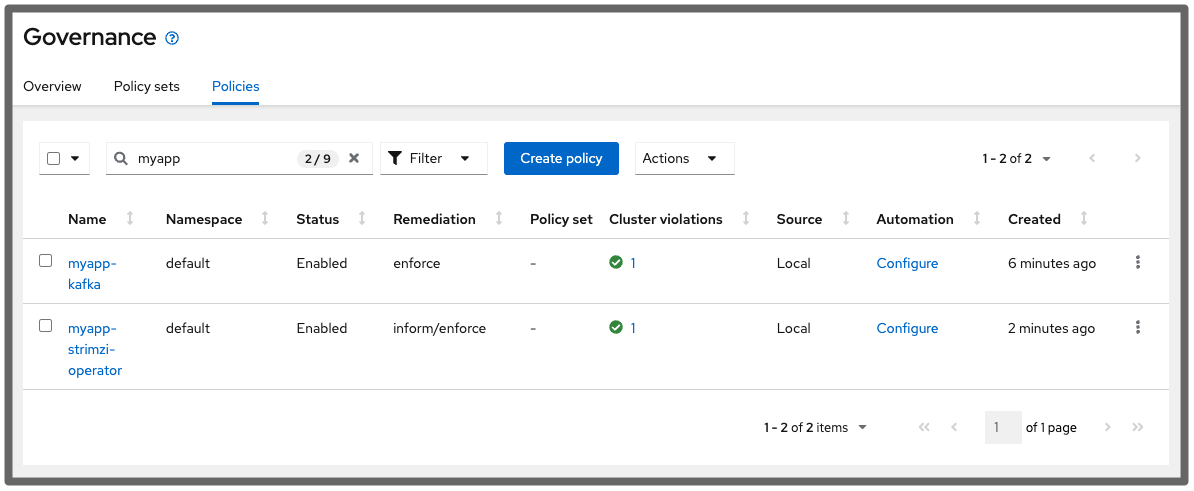

Once the namespace is created and the installation completes, everything becomes compliant after a short while:

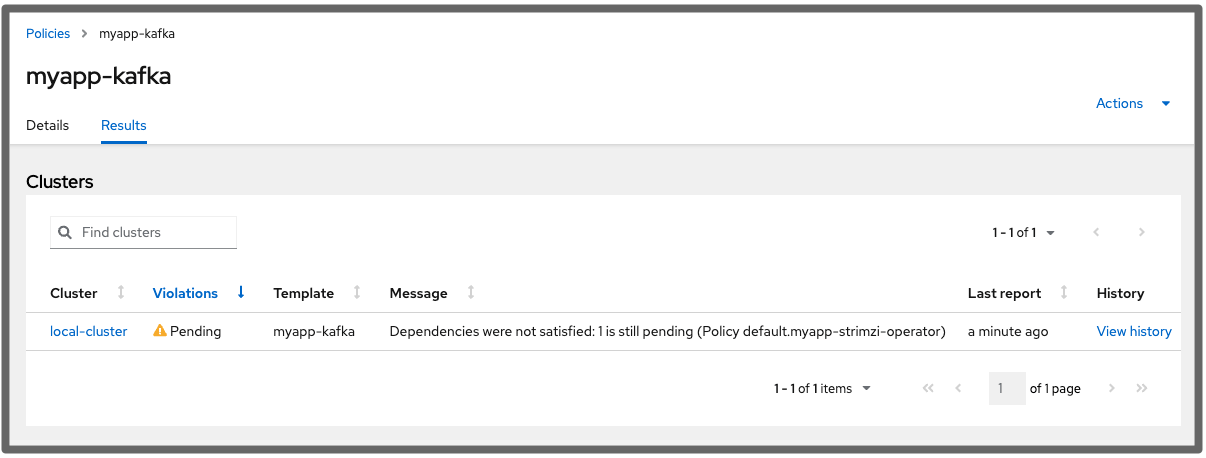

A second policy creates a Kafka instance once the operator is installed. It uses the dependencies field so that it is applied only after the operator policy is compliant, that is, after the operator has been installed successfully:

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: myapp-kafka
  namespace: default
spec:
  disabled: false
  dependencies:
    - apiVersion: policy.open-cluster-management.io/v1
      kind: Policy
      name: myapp-strimzi-operator
      namespace: default
      compliance: Compliant
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-kafka
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: kafka.strimzi.io/v1beta2
                kind: Kafka
                metadata:
                  name: my-cluster
                  namespace: myapp
                spec:
                  kafka:
                    config:
                      offsets.topic.replication.factor: 3
                      transaction.state.log.replication.factor: 3
                      transaction.state.log.min.isr: 2
                      default.replication.factor: 3
                      min.insync.replicas: 2
                      inter.broker.protocol.version: "3.3"
                    storage:
                      type: ephemeral
                    listeners:
                      - name: plain
                        port: 9092
                        type: internal
                        tls: false
                      - name: tls
                        port: 9093
                        type: internal
                        tls: true
                    version: 3.3.1
                    replicas: 3
                  entityOperator:
                    topicOperator: {}
                    userOperator: {}
                  zookeeper:
                    storage:
                      type: ephemeral
                    replicas: 3
```

The Kafka instance policy is marked as Pending until the operator policy is compliant. After the Kafka instance is created, both policies are compliant:

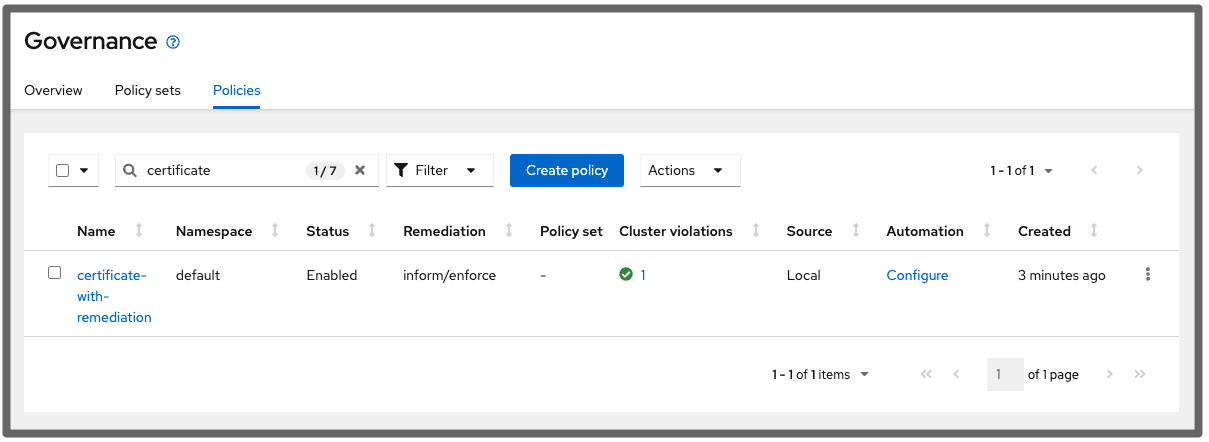
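A dependency does not have to target a whole Policy. As a hedged variation, the myapp-kafka policy could instead depend only on the myapp-strimzi-csv-check configuration policy from the operator policy. Because that configuration policy lives in the cluster namespace, its namespace field is left empty. Only the changed block is shown:

```yaml
# Alternative dependencies block for the myapp-kafka policy (other fields unchanged)
spec:
  dependencies:
    - apiVersion: policy.open-cluster-management.io/v1
      kind: ConfigurationPolicy
      name: myapp-strimzi-csv-check
      namespace: ""                 # resolved to the cluster namespace on each managed cluster
      compliance: Compliant
```

The trade-off is coupling: this ties the Kafka policy to one template name inside the operator policy, whereas depending on the Policy itself lets the operator policy change its internal templates freely.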

Example: refreshing a certificate

Configuration policies can be set to enforce mode, in which they automatically correct violations by modifying objects in the cluster. Other policy types do not always have this capability. For example, certificate policies can detect when a certificate is about to expire, but they cannot renew it themselves.

This example uses a hypothetical container image that runs in a Kubernetes Job to refresh a certificate. An application administrator might build such an image to automate certificate renewal in a particular namespace.

Using a policy dependency, the following example policy creates a Job whenever a certificate in the myapp namespace is due to expire within six hours (6h):

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: certificate-with-remediation
  namespace: default
spec:
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: CertificatePolicy
        metadata:
          name: expiring-myapp-certificates
        spec:
          namespaceSelector:
            include: ["myapp"]
          remediationAction: inform
          severity: low
          minimumDuration: 6h
    - extraDependencies:
        - apiVersion: policy.open-cluster-management.io/v1
          compliance: NonCompliant
          kind: CertificatePolicy
          name: expiring-myapp-certificates
          namespace: ""
      ignorePending: true
      objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: refresh-myapp-certificates
        spec:
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: batch/v1
                kind: Job
                metadata:
                  name: refresh-myapp-certificates
                  namespace: myapp
                spec:
                  template:
                    spec:
                      containers:
                        - name: refresher
                          image: quay.io/myapp/magic-cert-refresher:v1
                          command: ["refresh"]
                      restartPolicy: Never
                  backoffLimit: 2
          remediationAction: enforce
          pruneObjectBehavior: DeleteAll
          severity: low
```

Normally, when a dependency of a policy template is not satisfied, the template is marked as Pending and the policy is not considered compliant. To make this policy Compliant when no action is required, the template sets ignorePending: true.

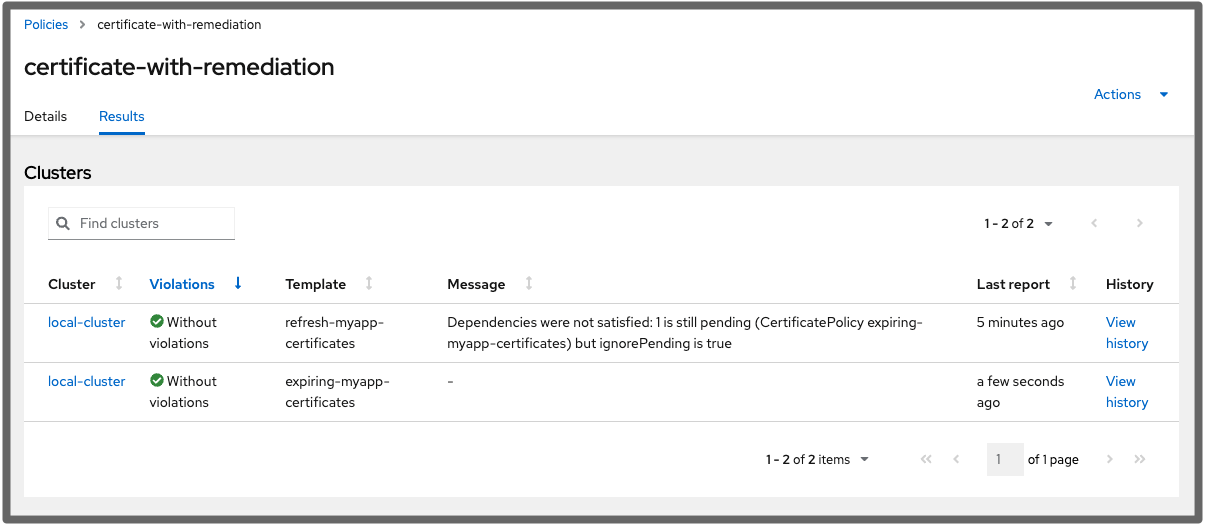

The policy is compliant in the console when no actions need to be made in relation to the cluster. Look at the illustration below:

The configuration policy is Pending yet is described as compliant in the message details:

When the template is inactive, the configuration-policy-controller deletes the Job because this policy sets pruneObjectBehavior: DeleteAll. As a result, the configuration policy creates a new Job each time it becomes active, instead of reusing the Job from the previous run.

Conclusion

Policy dependencies give policy authors an effective way to define relationships between the objects they manage. They also make it easier for cluster administrators to identify the blocker in a chain of policies that must be applied in a specific sequence. The feature is flexible enough to trigger remediation workflows automatically when a policy becomes noncompliant.

The Strimzi and Kafka example policies in this blog post could be extended to set up persistent storage for the Kafka cluster. That configuration might consist of one or more separate policies, yet it could add only one more policy dependency, for instance, a check that a persistent volume exists. Because the dependency does not define how the volume is created or provisioned, different provisioners can be used on different clusters.
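A rough sketch of such a check follows, under these assumptions: a hypothetical policy named myapp-kafka-storage, in inform mode, reports compliance when at least one PersistentVolume exists on the cluster (it relies on an unnamed musthave object matching any object of that kind), and the myapp-kafka policy gains a second entry in its dependencies list. How the volume is provisioned is deliberately left out:

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: myapp-kafka-storage         # hypothetical
  namespace: default
spec:
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-kafka-storage-check   # hypothetical
        spec:
          remediationAction: inform   # only checks; a provisioner or another policy creates the volume
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                # No name: assumed to match any PersistentVolume on the cluster
                apiVersion: v1
                kind: PersistentVolume
---
# Updated dependencies list of the myapp-kafka policy (other fields unchanged)
spec:
  dependencies:
    - apiVersion: policy.open-cluster-management.io/v1
      kind: Policy
      name: myapp-strimzi-operator
      namespace: default
      compliance: Compliant
    - apiVersion: policy.open-cluster-management.io/v1
      kind: Policy
      name: myapp-kafka-storage
      namespace: default
      compliance: Compliant
```

The Kafka object itself would also need a persistent storage type instead of ephemeral; that change is not shown here.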

Similarly, the certificate remediation example policy could create a Tekton PipelineRun instead of a simple Job. That pipeline might determine which certificates need to be refreshed and pass that information to another Task. This approach can be reused across many applications while keeping the policy dependency minimal.
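A minimal sketch of that substitution, assuming OpenShift Pipelines (Tekton) is installed on the managed cluster and that a Pipeline named refresh-myapp-certificates already exists in the myapp namespace (both are hypothetical here). Only the object-templates entry of the refresh-myapp-certificates configuration policy changes; the tekton.dev/v1beta1 API version is an assumption, and newer Tekton releases also serve tekton.dev/v1:

```yaml
# Replacement object-templates for the refresh-myapp-certificates ConfigurationPolicy
object-templates:
  - complianceType: musthave
    objectDefinition:
      apiVersion: tekton.dev/v1beta1
      kind: PipelineRun
      metadata:
        name: refresh-myapp-certificates
        namespace: myapp
      spec:
        pipelineRef:
          name: refresh-myapp-certificates   # hypothetical Pipeline defined elsewhere
```

Keeping pruneObjectBehavior: DeleteAll on the configuration policy would give the same behavior as with the Job: the PipelineRun is removed when the template becomes inactive, so each activation starts a fresh run.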