Importance of Cache Usage in IBM API Connect

In this blog, we look at why caching matters in IBM API Connect and walk through caching an OAuth token in an API assembly.

What is Cache in API Connect:

Cache in IBM API Connect refers to the process of storing and reusing frequently requested data or responses from APIs to improve performance and reduce the load on backend systems. By caching API responses, subsequent identical requests can be served faster, as the data is retrieved from the cache instead of going through the whole process of generating the response from the backend.

Example: Multiple APIs consume a backend REST API that is protected with an OAuth token, and the token expires after 1 hour. Without caching, every call to the REST API first has to generate a new token. A token like this can be stored in the cache and reused, so the token URL does not need to be called again until the token has expired.
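The idea behind this example can be sketched in plain JavaScript. This is only an illustration of time-to-live caching, not how the gateway implements it internally: the `TtlCache` class, the `getToken` helper and the `backend-token` key are all hypothetical names, and `fetchToken` stands in for the real call to the token URL.

```javascript
// Minimal in-memory time-to-live (TTL) cache, for illustration only.
class TtlCache {
  constructor(now = () => Date.now()) {
    this.now = now;            // injectable clock, so expiry can be simulated
    this.entries = new Map();  // key -> { value, expiresAt }
  }

  set(key, value, ttlSeconds) {
    this.entries.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 });
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      // The entry has outlived its TTL: drop it and report a miss.
      this.entries.delete(key);
      return undefined;
    }
    return entry.value;
  }
}

// Returns the cached token, or calls fetchToken() once and caches the result.
function getToken(cache, fetchToken, ttlSeconds = 3600) {
  let token = cache.get('backend-token');
  if (token === undefined) {
    token = fetchToken();
    cache.set('backend-token', token, ttlSeconds);
  }
  return token;
}
```

With a 3600 second TTL, `fetchToken` runs on the first call and then again only after the hour has passed, which is the behaviour we want from the gateway cache.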

Solution in IBM API Connect:

The backend REST API is called through an “invoke” policy in the API assembly, using the four policies described below.

1st policy TokenCredentials:

In this policy, we write GatewayScript code in a “GatewayScript” policy in the assembly to frame the request for token generation through the token URL.
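A minimal sketch of what such a script might look like is shown here. It assumes the token URL accepts an OAuth client credentials request as a form-encoded body, which the setup above does not state explicitly. The `buildTokenRequest` helper and the `token.client_id` and `token.client_secret` context variables are hypothetical, and the `context` calls assume the DataPower API Gateway flavour of GatewayScript, so adjust them for your gateway type.

```javascript
// Builds a form-encoded OAuth token request (hypothetical helper).
// Assumption: the token URL uses the client credentials grant.
function buildTokenRequest(clientId, clientSecret, scope) {
  const params = [
    'grant_type=client_credentials',
    'client_id=' + encodeURIComponent(clientId),
    'client_secret=' + encodeURIComponent(clientSecret),
  ];
  if (scope) {
    params.push('scope=' + encodeURIComponent(scope));
  }
  return {
    headers: { 'Content-Type': 'application/x-www-form-urlencoded' },
    body: params.join('&'),
  };
}

// Inside the gateway, `context` is supplied by the GatewayScript runtime.
// The guard lets the helper above also be exercised outside the gateway.
if (typeof context !== 'undefined') {
  // Hypothetical context variables holding the client credentials.
  const req = buildTokenRequest(
    context.get('token.client_id'),
    context.get('token.client_secret')
  );
  context.message.header.set('Content-Type', req.headers['Content-Type']);
  context.message.body.write(req.body);
}
```

Keeping the request framing in a small pure function makes it easy to check the encoded body before wiring it into the assembly.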

2nd policy TokenURLcalling:

This is the “invoke” policy that calls the token URL with the configuration shown below.

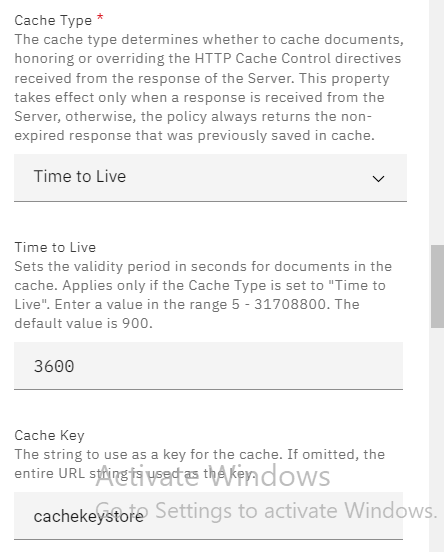

Cache Type: Time to Live

Time to Live: 3600 seconds (1 hour)

Cache Key: give a name under which the token response is cached and later looked up.
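In the API's YAML source, the same settings might look roughly like the fragment below. Treat it as a sketch: the title comes from the policy name above, but the `$(token-url)` property, the `backend-token` cache key, the `token-response` output variable and the policy version are placeholders, so compare the property names against the invoke policy reference for your API Connect version.

```yaml
# Sketch of the TokenURLcalling invoke policy; values are placeholders.
- invoke:
    title: TokenURLcalling
    version: 2.0.0                  # assumed policy version
    verb: POST
    target-url: $(token-url)        # hypothetical API property with the token URL
    cache-response: time-to-live
    cache-ttl: 3600                 # seconds (1 hour), same as the token expiry
    cache-key: backend-token        # hypothetical cache key name
    cache-putpost-response: true    # also cache responses to POST and PUT calls
    output: token-response          # hypothetical variable for the token response
```

Setting the TTL no higher than the token's own lifetime keeps the gateway from handing out a token that the backend would already reject.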

3rd policy Cache policy for Backend:

This is a GatewayScript policy in which we write a small piece of code to map the cached token, stored under the cache key, into the request for the backend REST API.
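As a rough sketch, the mapping can be as small as reading `access_token` from the token response and building an `Authorization` header from it. It assumes the token URL returns a standard OAuth JSON body with an `access_token` field and that the backend expects a bearer token; the helper names `extractAccessToken` and `bearerHeader` are hypothetical.

```javascript
// Extracts the access token from a token response body.
// Accepts either the raw JSON text or an already parsed object.
function extractAccessToken(tokenResponseBody) {
  const parsed = typeof tokenResponseBody === 'string'
    ? JSON.parse(tokenResponseBody)
    : tokenResponseBody;
  if (!parsed || typeof parsed.access_token !== 'string' || parsed.access_token === '') {
    throw new Error('Token response does not contain access_token');
  }
  return parsed.access_token;
}

// Builds the Authorization header value expected by the backend
// (assumption: the backend uses the Bearer scheme).
function bearerHeader(tokenResponseBody) {
  return 'Bearer ' + extractAccessToken(tokenResponseBody);
}

// In the gateway, the token response body would be read from wherever the
// token invoke policy stored its output, and the header set on the request:
//   context.message.header.set('Authorization', bearerHeader(tokenBody));
```

Failing loudly when `access_token` is missing avoids silently calling the backend with an empty header.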

4th policy BackendURL:

Here we call the backend REST API with the security token.

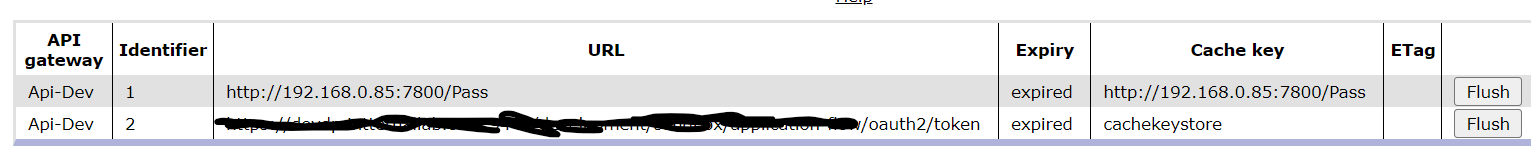

You can observe the cache in IBM DataPower, in the respective domain, under the “API Document Status” object.

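Troubleshooting: In most cases, cache-putpost-response is already enabled for the token call. If it is not, add the line “cache-putpost-response: true” under the invoke policy in the .yaml file. Token requests are typically sent with POST, and responses to POST and PUT calls are cached only when this setting is enabled.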

Summary: The API obtains the token once and stores it in the cache, then passes the same token to the backend until it expires. This avoids a call to the token URL on every request, which reduces latency and makes the API respond faster.