OpenShift Container Platform Extension for AWS Local Zones

In this blog post, we look at the OpenShift Container Platform extension for AWS Local Zones.

AWS Local Zones let you run select AWS services, such as compute and storage, closer to end users in large metropolitan areas than the regular Availability Zones of a Region. This gives those users very low-latency access to applications running locally. Local Zones don’t require a hardware lease or purchase; AWS owns and manages them entirely. AWS’s redundant, high-bandwidth private network also connects each Local Zone to its parent Region, giving applications running in a Local Zone fast, secure, and seamless access to the rest of the AWS services.

AWS Local Zones and OpenShift

Service users and application developers who use OpenShift with Local Zones gain the following benefits:

- Better application performance and user experience from placing resources closer to the user. Local Zones shorten application load times and improve responsiveness by reducing the time data spends travelling over the network. Applications that need low latency and real-time data access, such as online gaming and video streaming, benefit the most.

- Lower costs from hosting resources in specific locations. Customers can avoid data transfer charges such as cloud egress fees, which can be a major expense for applications that move large volumes of images, graphics, and video between regions.

- A way for regulated industries, including healthcare, government organizations, and financial institutions, to meet data residency requirements by keeping data and applications in designated locations as the law requires.

Limitations of AWS Local Zones in OpenShift

When deploying OpenShift, take note of these limitations in the current AWS Local Zones offering:

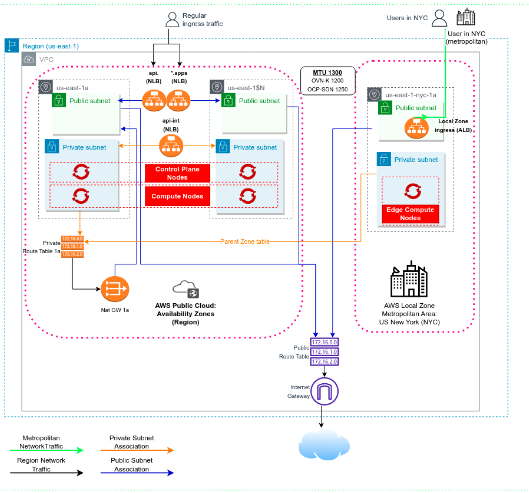

- The Maximum Transmission Unit (MTU) between an Amazon EC2 instance in the Local Zone and an Amazon EC2 instance in the Region is 1300 bytes. As a result, the overlay network MTU must be adjusted, and the value depends on the network plugin used for the deployment.

- The installer does not automatically deploy network resources such as Network Load Balancers (NLB), Classic Load Balancers, and NAT gateways, because they are not available in every AWS Local Zone.

- On standard AWS OpenShift clusters, the default volume type for node volumes and for the default storage class is the Amazon Elastic Block Store (EBS) gp3 volume type. This volume type is not available in every Local Zone. Local Zone nodes are therefore deployed with the gp2 EBS volume type by default, and workloads created on those nodes must use the gp2-csi StorageClass.
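The MTU adjustment in the first limitation comes down to simple arithmetic, sketched below. The overhead values (100 bytes for OVN-Kubernetes, 50 bytes for OpenShift SDN) are assumptions based on the commonly documented encapsulation overhead of each plugin, and the function name overlay_mtu is hypothetical; verify both against the documentation for your OpenShift version.

```shell
#!/bin/sh
# overlay_mtu LINK_MTU PLUGIN
# Subtract the plugin's encapsulation overhead from the link MTU.
# Assumed overheads: OVNKubernetes = 100 bytes, OpenShiftSDN = 50 bytes.
overlay_mtu() {
  link_mtu="$1"
  case "$2" in
    OVNKubernetes) overhead=100 ;;
    OpenShiftSDN)  overhead=50 ;;
    *) echo "unknown network plugin: $2" >&2; return 1 ;;
  esac
  echo $((link_mtu - overhead))
}

# Link MTU between the Local Zone and the Region is 1300 bytes.
overlay_mtu 1300 OVNKubernetes
overlay_mtu 1300 OpenShiftSDN
```

Under these assumptions, the cluster network MTU would be 1200 for OVN-Kubernetes and 1250 for OpenShift SDN.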

Setting up AWS Local Zones for an OpenShift cluster installation

This section explains how to deploy OpenShift compute nodes in Local Zones during cluster creation. The OpenShift installer fully automates the cluster installation, including the configuration of network components in the Local Zones.

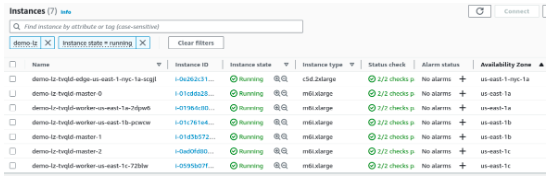

The diagram shows the infrastructure components created by the IPI installation, together with the worker nodes in the Local Zone:

- One regular VPC, with subnets in each Availability Zone of the Region.

- One standard OpenShift cluster in us-east-1, with three control plane nodes and three compute nodes.

- Public and private subnets in the Local Zone of the New York metropolitan area (us-east-1-nyc-1a).

- One edge compute node in the private subnet of the us-east-1-nyc-1a zone.

To deploy an OpenShift cluster with compute nodes in Local Zone subnets, define the edge compute pool in the install-config.yaml file; this pool is not enabled by default.

During installation, the installer creates the network components in the Local Zone, which it classifies as an “Edge Zone”, and generates a machine set manifest for each location. For further information, see the OpenShift documentation.

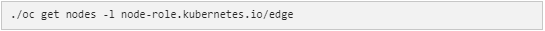

Following cluster installation, each node in the Local Zone has the label node-role.kubernetes.io/edge set in addition to the default label node-role.kubernetes.io/worker.

Prerequisites

Install the clients:

- OpenShift installer 4.14+

- OpenShift CLI (oc)

- AWS CLI

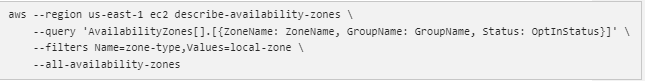

Step 1: Enable the AWS Local Zone group

Before you can create resources in a Local Zone, you must opt in to its zone group.

With the AWS CLI, you can use the DescribeAvailabilityZones operation to list the available Local Zones along with their attributes.
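As a minimal sketch, the listing could be wrapped in a small helper like the one below. The function name list_local_zones is hypothetical, and the choice of output columns is an assumption made for readability; the underlying aws ec2 describe-availability-zones command and its zone-type filter are part of the AWS CLI.

```shell
# list_local_zones REGION
# List the Local Zones of a Region with their zone group and opt-in status
# (calls the DescribeAvailabilityZones operation through the AWS CLI).
list_local_zones() {
  aws ec2 describe-availability-zones \
    --region "$1" \
    --all-availability-zones \
    --filters Name=zone-type,Values=local-zone \
    --query 'AvailabilityZones[].[ZoneName,GroupName,OptInStatus]' \
    --output table
}

# Example: list_local_zones us-east-1
```

The --all-availability-zones flag matters here: without it, zones you have not yet opted in to are left out of the result.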

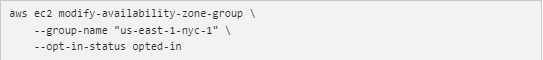

Run this command to enable New York’s Local Zone, which is used in this post:
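A minimal sketch of the opt-in using the AWS CLI's modify-availability-zone-group command follows. The function name enable_zone_group is hypothetical, and the group name us-east-1-nyc-1 is an assumption derived from the zone name us-east-1-nyc-1a; confirm it against the GroupName attribute returned by DescribeAvailabilityZones.

```shell
# enable_zone_group REGION GROUP
# Opt in to a Local Zone group so resources can be created in its zones.
enable_zone_group() {
  aws ec2 modify-availability-zone-group \
    --region "$1" \
    --group-name "$2" \
    --opt-in-status opted-in
}

# Example for the New York Local Zone (assumed group name):
# enable_zone_group us-east-1 us-east-1-nyc-1
```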

Step 2: Create the OpenShift cluster

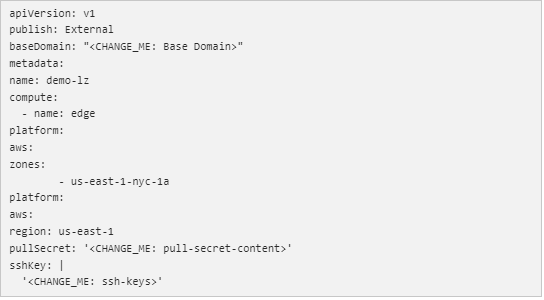

Create the install-config.yaml file, setting the name of the AWS Local Zone in the edge compute pool:
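The edge pool entry might look like the fragment printed by the sketch below. The helper name edge_pool_fragment is hypothetical, and the field layout is an assumption based on the edge compute pool format described in the OpenShift documentation for 4.14; it is only the compute section, not a complete install-config.yaml.

```shell
# edge_pool_fragment ZONE
# Print the compute-pool entry that places edge nodes in the given Local Zone.
edge_pool_fragment() {
  cat <<EOF
compute:
- name: edge
  platform:
    aws:
      zones:
      - $1
EOF
}

edge_pool_fragment us-east-1-nyc-1a
```

A full install-config.yaml also needs the usual fields (base domain, cluster name, AWS region, pull secret, SSH key), which are omitted here.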

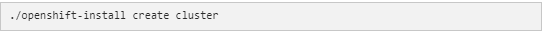

Create the cluster:
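A minimal sketch of this step, assuming install-config.yaml sits in a directory of your choosing. The function name create_cluster and the backup step are illustrative additions; openshift-install create cluster with --dir is the installer's standard invocation.

```shell
# create_cluster DIR
# Run the installer against the directory that holds install-config.yaml.
# The installer consumes install-config.yaml, so a backup copy is kept first.
create_cluster() {
  cp "$1/install-config.yaml" "$1/install-config.yaml.bak"
  openshift-install create cluster --dir "$1" --log-level info
}

# Example (hypothetical directory): create_cluster ./demo-cluster
```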

That’s all. The installer sets up all the configuration and infrastructure needed to extend worker nodes to the chosen location.

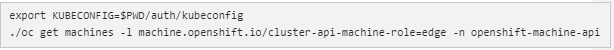

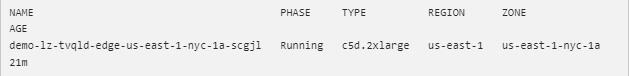

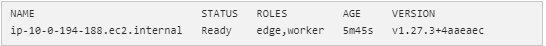

After installation is complete, check the status of the EC2 worker nodes provisioned by the Machine API.

Once a machine reaches the Running phase, you can also inspect the nodes created in AWS Local Zones, which carry the label node-role.kubernetes.io/edge.
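Both checks can be sketched with the OpenShift CLI as follows. The function names are hypothetical; the openshift-machine-api namespace is where the Machine API keeps its Machine objects, and the label selector is the edge label described earlier.

```shell
# Machines created by the Machine API, including those in the edge machine set.
list_machines() {
  oc get machines -n openshift-machine-api -o wide
}

# Nodes running in Local Zones, selected by the edge role label.
list_edge_nodes() {
  oc get nodes -l node-role.kubernetes.io/edge
}

# Example:
# list_machines
# list_edge_nodes
```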

After installation, the cluster is ready to run workloads on the edge compute nodes.

The following links describe additional tasks for getting more out of Local Zones with OpenShift:

- Day 2 operations: deploying workloads in Local Zones

- Extending existing clusters to AWS Local Zones

- Application Load Balancer Operator

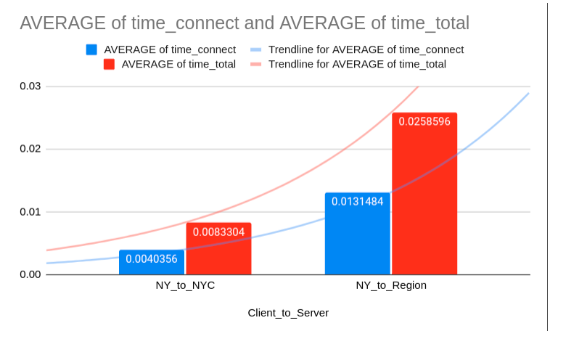

Comparing application connection times

To verify the network improvement from delivering the application closer to the user, we extended the cluster installed in the previous section with a node in a second Local Zone, us-east-1-bue-1a.

The tests measure client connection times to the application endpoints deployed in the cluster from several internet locations, some of them close to the metropolitan areas served by the Local Zone nodes. This quantifies the network benefit of using edge compute pools in OpenShift.

The picture below gives an overview of the test environment:

- Three clients test three servers (endpoints).

- The clients are located in New York (US), London (UK), and South America.

- The application is deployed in several locations within the same cluster: an Availability Zone in the Region (us-east-1), the New York Local Zone (us-east-1-nyc-1a), and the Buenos Aires Local Zone (us-east-1-bue-1a).

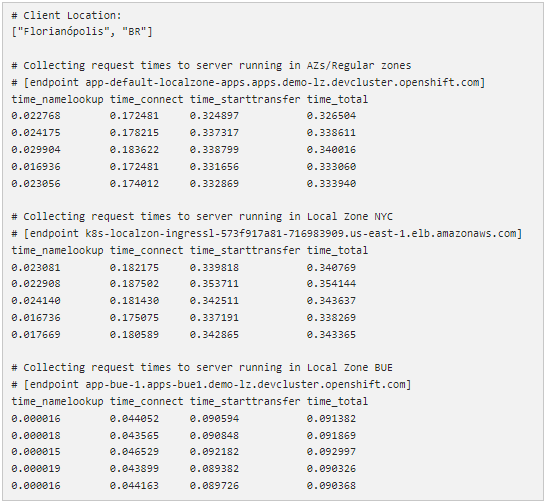

The tests send requests from each client, extract curl’s timing variables, and print them to the console.

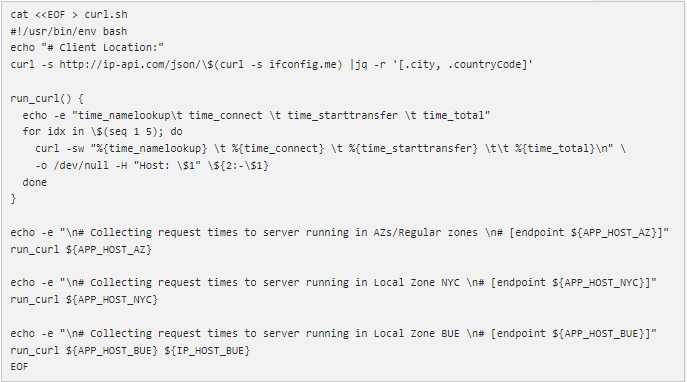

To test the endpoints, create the curl.sh script:
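A minimal sketch of such a script is shown below. The function name probe and the selection of timing fields are assumptions; the %{...} names are standard curl write-out variables, and the endpoint URLs are passed as arguments rather than hard-coded.

```shell
#!/bin/sh
# curl.sh: print connection timings for each endpoint given as an argument.
# Usage: ./curl.sh URL [URL ...]

# probe URL
# Request the URL, discard the body, and print one line of timing values.
probe() {
  curl -s -o /dev/null \
    -w '%{url_effective} http_code=%{http_code} time_namelookup=%{time_namelookup} time_connect=%{time_connect} time_starttransfer=%{time_starttransfer} time_total=%{time_total}\n' \
    "$1"
}

for url in "$@"; do
  probe "$url"
done
```

Running the same script from every client keeps the measurements comparable, since each line reports the same fields for each endpoint.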

Copy curl.sh to the clients and run it.

Results reported by the clients:

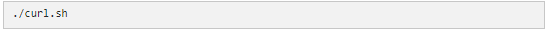

- Results for the client in the US/New York region:

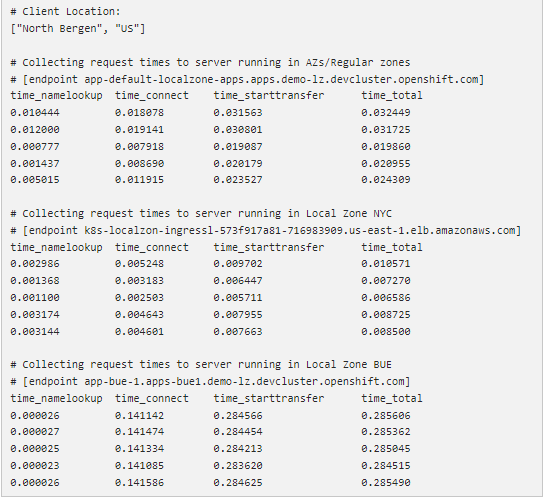

- Results for the client in the UK/London region:

- Results for the client in the Brazil/South region:

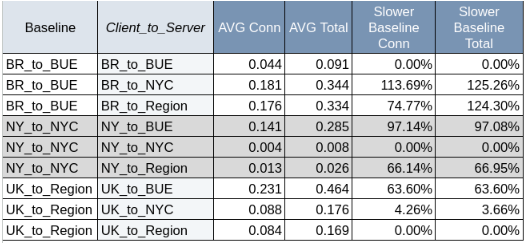

- Overall results:

From the client in NYC (outside AWS), connecting to the application on the OpenShift edge node in the Local Zone took fewer milliseconds in total than connecting to the application running in the regular zones. Clients in other countries benefit as well. For example, because of the geographic proximity, the client in Brazil saw a lower total request time when accessing the Buenos Aires deployment than when using the application in the Region.